AI Code Review vs. Manual Review: Key Differences

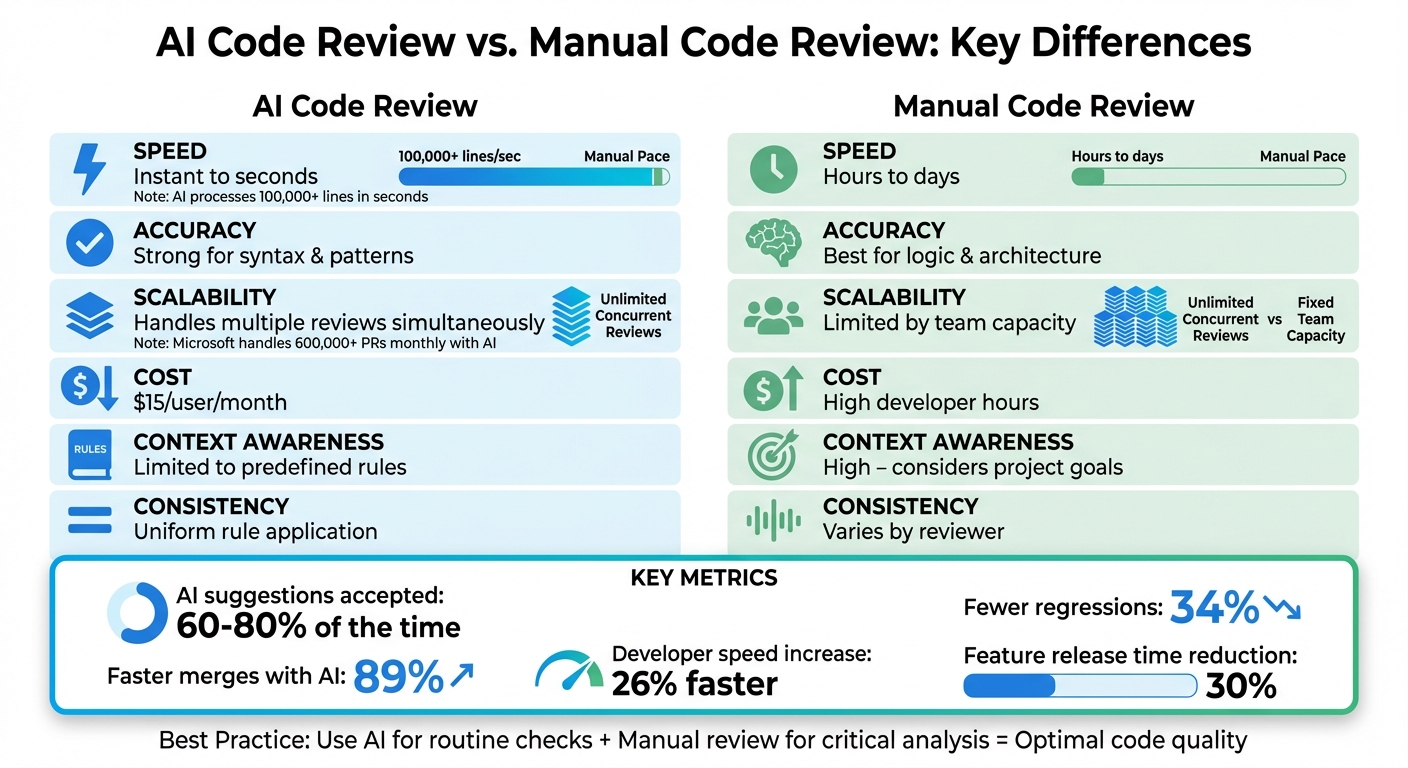

AI code reviews and manual reviews each play a distinct role in improving software quality. AI excels at speed, consistency, and repetitive tasks such as identifying syntax errors or common security flaws, often acting as a fully automated QA engineer. Manual reviews, on the other hand, bring human judgment, context, and expertise to assess business logic, architectural decisions, and complex scenarios.

Key Takeaways:

- AI Strengths: Fast analysis, scalability, and uniform application of rules. Ideal for catching routine issues and integrating into continuous testing in CI/CD pipelines.

- Manual Review Strengths: Deep understanding of code intent, architectural oversight, and mentorship opportunities. Essential for high-risk areas like security and long-term maintainability.

- Hybrid Approach: Combining AI and manual reviews ensures efficiency without sacrificing depth. AI handles routine checks, while humans focus on nuanced, critical validation.

Quick Comparison:

| Factor | AI Code Review | Manual Code Review |
|---|---|---|
| Speed | Instant feedback | Hours to days |
| Accuracy | Strong for syntax & patterns | Best for logic & architecture |
| Scalability | Handles multiple reviews at once | Limited by team capacity |
| Cost | Lower (e.g., $15/user/month) | Higher (developer hours) |
| Context Awareness | Limited to predefined rules | High, considers project goals |
| Consistency | Uniform rule application | Varies by reviewer |

To get the best results, use AI tools for quick, large-scale checks and manual reviews for critical, context-driven analysis. Together, they create a balanced, efficient workflow.



AI Code Review vs. Manual Review: Side-by-Side Comparison

Choosing between AI and manual code reviews depends on understanding how each performs across key aspects of the development process. The table below compares their strengths and limitations to help you make informed decisions.

| Factor | AI Code Review | Manual Code Review | Practical Notes |
|---|---|---|---|
| Speed | Instant to seconds | Hours to days | AI can process over 100,000 lines of code in seconds, while manual reviews depend on reviewer availability. |
| Accuracy | Strong at spotting syntax errors and common security flaws; limited with business logic | Excels in analyzing code logic and architecture; less consistent with simple patterns | AI is great for routine issues like style inconsistencies, but humans are better at finding complex problems like race conditions or architectural flaws. |
| Scalability | Excellent – handles multiple pull requests simultaneously | Limited by team size, which can create bottlenecks | AI maintains efficiency regardless of workload. |
| Cost | Low – subscription-based (e.g., $15/user/month) | High – reflects the cost of developer time | Large teams may spend thousands of developer-hours on manual reviews annually. |
| Context Awareness | Low – relies on predefined rules with limited understanding of project context | High – considers project history and business goals | Humans align reviews with long-term strategies, while AI focuses on specific rules. |
| Consistency | High – applies rules uniformly | Variable – depends on individual reviewer judgment | AI eliminates bias, but humans provide nuanced insights for complex scenarios. |
| Flexibility | Low – rigid, rule-based approach | High – adapts to unconventional but valid solutions | AI may flag edge cases as errors, while human reviewers account for context-specific exceptions. |

This comparison highlights how AI speeds up reviews by catching routine issues, such as syntax errors and style violations, while manual reviews are essential for evaluating business logic and architectural soundness.

Developers typically accept 60% to 80% of AI suggestions, so every recommendation still needs to be evaluated and some rejected. The speed gains are also not guaranteed: teams with properly implemented tools report pull request merges up to 89% faster, yet one empirical study found that AI-generated comments increased average closure time from about 5 hours 52 minutes to 8 hours 20 minutes because of the extra time spent deliberating over the feedback. Fine-tuning AI tools to reduce false positives can help streamline this process.
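To put the closure-time figures in perspective, the short calculation below converts both durations and computes the relative increase. It uses only the two durations quoted above; the function and variable names are illustrative.

```python
from datetime import timedelta

def relative_increase(before: timedelta, after: timedelta) -> float:
    """Return the fractional increase from `before` to `after`."""
    # Dividing one timedelta by another yields a plain float.
    return (after - before) / before

# The two average closure times quoted in the text.
before = timedelta(hours=5, minutes=52)   # 352 minutes
after = timedelta(hours=8, minutes=20)    # 500 minutes

increase = relative_increase(before, after)
print(f"Closure time rose by {increase:.0%}")  # about 42%
```

In other words, the reported slowdown is roughly two fifths of the original closure time, which is why filtering noisy suggestions matters.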

"Automation accelerates you, but people give you perspective. The best pipelines have both." - JetBrains

Integrating AI with human oversight ensures the best of both worlds. AI handles repetitive tasks efficiently, while humans provide the nuanced judgment required for complex decisions. Tools like Ranger exemplify how combining these approaches can maximize code quality. A hybrid strategy leverages the strengths of each method, offering a balanced and effective approach to code reviews.

Benefits of AI Code Review

AI-driven code review tools revolutionize quality checks by analyzing thousands of lines of code in mere seconds. The moment a pull request is submitted, these tools provide immediate feedback. This rapid response is a game-changer in high-pressure development environments where delays can stall progress. The ability to work at such speed lays the groundwork for additional advantages in scalability and consistency.

One standout feature of AI is its scalability. Take Microsoft’s internal AI code review assistant as an example - it manages over 600,000 pull requests every month, covering 90% of the company’s pull requests. Unlike manual reviews, which are limited by human bandwidth, AI systems maintain consistent performance and throughput. They can handle multiple pull requests simultaneously without losing focus, ensuring the same level of scrutiny on the hundredth review as on the first. This consistency is especially effective in hybrid models where AI complements human expertise.

Another key advantage is the standardization of code review practices. For instance, AI excels at identifying security vulnerabilities, such as OWASP patterns like SQL injection and Cross-Site Scripting (XSS), as well as detecting leaked secrets and hardcoded tokens. Microsoft’s adoption of AI tools resulted in a 10% to 20% improvement in median pull request completion times across 5,000 repositories. This kind of reliability ensures that standards are uniformly applied, reducing the risk of errors slipping through.

AI code reviews also influence development culture in meaningful ways. Developers report feeling 20% more innovative when they receive quick feedback, as it allows them to move seamlessly to their next idea without losing momentum. By eliminating bottlenecks, AI tools can cut feature release times by up to 30%. Additionally, developers using AI assistance complete tasks 26% faster than those working without it. By handling routine checks - like verifying formatting or identifying simple bugs - AI frees up senior developers to focus on complex architecture and business-critical logic. This redistribution of effort enables teams to channel their energy into high-impact tasks that drive innovation and strategic growth.

"AI powered code reviews are a catalyst for transforming how we approach code reviews at scale. By combining the power of large language models with the rigor of human workflows, it empowers developers to write better code faster." - Sneha Tuli, Principal Product Manager, Microsoft

Benefits of Manual Review

AI tools are unmatched in speed and volume, but human reviewers bring critical judgment and context that machines simply can't replicate. Manual reviews excel in areas where understanding the "why" behind code changes is more important than just identifying patterns. In fact, a 2024 survey revealed that 36% of organizations consider code reviews the most effective way to improve code quality. Human reviewers can interpret business requirements, weigh architectural trade-offs, and analyze project risks to ensure the code addresses the intended problem. This level of insight is essential for creating robust architecture and maintaining security in complex projects.

One of the standout advantages of manual review lies in preserving architectural integrity and long-term maintainability. Human reviewers are adept at spotting design patterns that may lead to problems down the line, ensuring new components integrate seamlessly into the system's architecture. For example, they can verify that services rely on approved APIs instead of directly accessing databases. Research from IEEE highlights that while automated tools are excellent at catching repetitive technical errors, manual reviews are far better at identifying nuanced architectural and design challenges. This human touch complements the speed and consistency of AI tools.

When addressing complex security threats, manual reviews are indispensable. Human reviewers excel at evaluating operational context, uncovering issues like improper authorization checks, flawed payment processes, and race conditions - problems that static rules often overlook. As Jeff Atwood, Co-Founder of Stack Overflow, famously said:

"You'll quickly find that every minute you spend in a code review is paid back tenfold."

Beyond catching errors, manual reviews foster mentorship and knowledge sharing within teams. Senior developers can use the review process to explain their reasoning and share insights with junior members, strengthening team alignment and promoting a culture of ongoing learning. According to Stack Overflow's 2023 developer survey, developers continue to place high value on human judgment for learning and ensuring maintainability over time.

To maximize the impact of manual reviews, focus on high-priority areas like authentication, payment systems, and encryption. Keeping pull requests small helps prevent fatigue and allows for more thorough analysis. Additionally, rotating reviewers ensures fresh perspectives and reduces familiarity bias, making it easier to catch issues that automated tools might miss.
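One way to encourage small pull requests is to measure them automatically. The sketch below counts added and removed lines in a unified diff and flags oversized changes. The 400-line limit is an assumed placeholder rather than a recommendation from this article, and the helper names are hypothetical.

```python
MAX_CHANGED_LINES = 400  # assumed team limit; tune to your own review capacity

def changed_line_count(diff_text: str) -> int:
    """Count added and removed lines in a unified diff, skipping file headers."""
    count = 0
    for line in diff_text.splitlines():
        if line.startswith(("+++", "---")):
            continue  # file header lines, not content changes
        if line.startswith(("+", "-")):
            count += 1
    return count

def is_too_large(diff_text: str, limit: int = MAX_CHANGED_LINES) -> bool:
    """Return True when the diff exceeds the agreed size limit."""
    return changed_line_count(diff_text) > limit

SAMPLE_DIFF = """\
--- a/app.py
+++ b/app.py
@@ -1,3 +1,4 @@
 import os
-print("hi")
+print("hello")
+print("world")
"""

print(changed_line_count(SAMPLE_DIFF))  # 3
print(is_too_large(SAMPLE_DIFF))        # False
```

The header check is a simplification: a removed line whose content itself starts with `--` would be skipped, so treat the count as an approximation.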

Detailed Comparison Across Key Factors

AI reviews and manual reviews take fundamentally different approaches to evaluating code, which impacts their depth, speed, and focus. AI scans entire repositories in one go, while manual reviews meticulously examine code line-by-line. Developers performing manual reviews focus on specific changes in a Pull Request, ensuring they align with business needs. While manual reviews can take hours or even days, AI tools can process over 100,000 lines of code in mere seconds. These differences highlight how each method brings distinct advantages in areas like security, scope, and feedback.

When it comes to security, AI relies on pattern recognition to identify known vulnerabilities, such as SQL injection, XSS, or hardcoded secrets, using predefined rules. On the other hand, manual reviews use threat modeling, where developers simulate potential attacks and assess business logic for flaws. This approach helps catch issues that AI might miss, like payment systems allowing negative values. As OWASP explains, "Automated scanning can't find every issue like XSS flaws, hence manual code reviews are important." These complementary strengths make both methods valuable in the development process.
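The difference can be illustrated with a toy rule-based scanner. This is a minimal sketch, not how any particular tool works: the two regular expressions and the sample snippet are hypothetical and far simpler than real rule sets.

```python
import re

# Hypothetical, deliberately simple rules in the spirit of a pattern-based scanner.
RULES = {
    "hardcoded-secret": re.compile(
        r"(api_key|password|token)\s*=\s*['\"][^'\"]+['\"]", re.IGNORECASE
    ),
    "sql-string-concat": re.compile(r"execute\(\s*['\"].*['\"]\s*\+"),
}

def scan(source: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for name, pattern in RULES.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings

SNIPPET = '''
API_KEY = "sk-live-123"
cursor.execute("SELECT * FROM users WHERE id = " + user_id)
def charge(account, amount):
    account.balance -= amount  # a negative amount would credit the account
'''

for lineno, rule in scan(SNIPPET):
    print(f"line {lineno}: {rule}")
```

The scanner flags the hardcoded key and the concatenated SQL string, but it has no rule that knows `amount` must be positive. That missing check in `charge` is the kind of business-logic flaw a human reviewer would be expected to catch.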

The scope of analysis further separates these two approaches. Manual reviews concentrate on how new code integrates with the existing project architecture, ensuring smooth functionality. Meanwhile, AI casts a wider net, scanning historical commits, third-party dependencies, and identifying repository-wide issues. AI's consistency stands out - it applies the same rules across the board without fatigue. In contrast, the quality of manual reviews can vary depending on the reviewer’s expertise, mood, and focus.

Integration and feedback timing also differ significantly. AI tools integrate seamlessly with IDEs and CI/CD pipelines, providing real-time feedback as developers write code. This "AI-first" approach is gaining traction, with 45% of organizations now using AI-driven reviews to speed up deployments. Manual reviews, however, take place during the Pull Request stage, serving as a final checkpoint. While this ensures a thorough review, it can slow things down if reviewers are unavailable. These differences in integration shape how each method contributes to the workflow.

Interestingly, research shows that adding AI-generated comments to a Pull Request can increase the average closure time from 5 hours 52 minutes to 8 hours 20 minutes. The extra time suggests that developers still weigh each suggestion themselves: AI provides speed and consistency, but human reviewers bring the essential context and accountability. As Jon Wiggins, a Machine Learning Engineer, explains:

"I tend to think that if an AI agent writes code, it's on me to clean it up before my name shows up in git blame."

These findings highlight the value of combining AI efficiency with human judgment to achieve the highest code quality.

When to Choose AI Code Review or Manual Review

Deciding between AI and manual code review can significantly affect your team's workflow and the overall quality of your codebase. The key is understanding their strengths and when to leverage each. AI is ideal for tackling routine, low-risk issues. It shines in quickly identifying syntax errors, enforcing style guidelines, and catching common code smells like unused variables. AI is also highly effective at scanning for known security vulnerabilities, such as those listed in the OWASP Top 10, including SQL injection, Cross-Site Scripting (XSS), and hardcoded secrets. With the growing reliance on AI in the industry, it's clear that it handles these straightforward tasks efficiently, allowing your team to focus on more complex challenges.

On the other hand, manual reviews are indispensable for scenarios requiring context, judgment, and deep expertise. Tasks like evaluating architectural decisions, analyzing intricate business logic, and reviewing high-stakes security components - such as authentication, payment systems, and encryption - demand human insight. For instance, AI might flag potential issues, but it cannot determine if your payment system handles edge cases correctly or if a new feature aligns with long-term project goals. This is where professional accountability and domain knowledge become critical, ensuring that high-risk areas are thoroughly vetted.

To maximize efficiency, consider integrating a fully automated QA engineer into your CI/CD pipelines. Let AI handle routine checks first, freeing up human reviewers to focus on more nuanced, critical validation. Research indicates that teams using AI-driven code review agents see 89% faster merges and 34% fewer regressions when the tools are properly implemented.

A practical approach is to address AI-identified issues first, then assign manual reviews to critical areas like authorization logic, core business algorithms, and complex integrations involving multiple services. Be mindful of how you configure AI tools - filtering for only critical issues can help avoid alert fatigue. Using a test case prioritization tool can further help teams focus on high-impact areas first. An empirical study revealed that unfiltered AI comments increased the average Pull Request closure time from 5 hours 52 minutes to 8 hours 20 minutes. For new features with undefined boundaries or code handling Personally Identifiable Information (PII), human oversight is non-negotiable. After all, AI lacks the accountability and responsibility that humans bring to the table. If a flaw goes unnoticed and causes a production failure, the repercussions fall squarely on your team, not the AI. In high-risk scenarios, your team's expertise remains irreplaceable.
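The triage described above can be sketched in a few lines. Everything here is an assumption made for illustration: the `Finding` shape, the three-level severity scale, and the path prefixes are hypothetical and do not come from any specific tool.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    path: str
    severity: str  # assumed scale: "info", "warning", or "critical"
    message: str

# Hypothetical path prefixes that should always get a human reviewer.
HIGH_RISK_PREFIXES = ("auth/", "payments/", "crypto/")

def triage(findings, changed_paths):
    """Keep only critical AI findings and list changed files needing manual review."""
    to_show = [f for f in findings if f.severity == "critical"]
    needs_human = sorted(
        p for p in changed_paths if p.startswith(HIGH_RISK_PREFIXES)
    )
    return to_show, needs_human

findings = [
    Finding("payments/refund.py", "critical", "possible SQL injection"),
    Finding("docs/util.py", "info", "line exceeds 100 characters"),
]
to_show, needs_human = triage(findings, ["payments/refund.py", "docs/util.py"])

print([f.message for f in to_show])  # ['possible SQL injection']
print(needs_human)                   # ['payments/refund.py']
```

Surfacing only the critical finding keeps the comment volume low, while the path check guarantees that sensitive files reach a person regardless of what the tool reports.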

How to Combine AI and Manual Code Review

The best way to approach code review is to start with AI scans as part of your CI/CD pipeline. These scans catch mechanical issues like syntax errors, style inconsistencies, and common security vulnerabilities before the code even reaches a human reviewer. Automating this initial step saves time by eliminating the need for manual error-spotting, which can be tedious and repetitive. Once the AI completes its analysis, the next step is to address the flagged issues proactively.

After the AI scan, developers should resolve the identified issues right away. This includes fixing straightforward problems - those "quick wins" - before sending the code for a human review. Essentially, clean up any AI-detected issues before your name shows up in the git blame log. This process ensures that when a human reviewer steps in, their attention is directed toward evaluating the more intricate aspects of the code, such as its logic and architecture, rather than minor details.

It's also crucial to fine-tune your AI tools to avoid overwhelming developers with unnecessary alerts. Configure rule sets and sensitivity levels to align with your project's coding standards. Keep an eye on how often developers accept AI suggestions, as this can indicate whether the tool needs adjustment. For example, one study revealed that poorly configured AI feedback increased pull request closure times from about 5 hours and 52 minutes to 8 hours and 20 minutes. If suggestions are frequently ignored, it's a sign that the rules need refining.
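Acceptance can be tracked per rule so that noisy rules stand out. The sketch below assumes you can export a log of (rule, accepted) pairs from your tool; the rule names are made up, and the 0.6 threshold simply mirrors the lower end of the 60% to 80% acceptance range mentioned earlier.

```python
from collections import defaultdict

def acceptance_by_rule(events):
    """events: iterable of (rule_id, accepted) pairs. Returns {rule_id: rate}."""
    shown = defaultdict(int)
    accepted = defaultdict(int)
    for rule_id, was_accepted in events:
        shown[rule_id] += 1
        if was_accepted:
            accepted[rule_id] += 1
    return {rule: accepted[rule] / shown[rule] for rule in shown}

def rules_to_retune(rates, threshold=0.6):
    """Return rule ids whose acceptance rate falls below the threshold."""
    return sorted(rule for rule, rate in rates.items() if rate < threshold)

# Made-up sample log for illustration only.
events = [
    ("style/line-length", False),
    ("style/line-length", False),
    ("style/line-length", True),
    ("style/line-length", False),
    ("security/hardcoded-secret", True),
    ("security/hardcoded-secret", True),
    ("security/hardcoded-secret", True),
]

rates = acceptance_by_rule(events)
print(rates["style/line-length"])  # 0.25
print(rules_to_retune(rates))      # ['style/line-length']
```

A rule that developers reject three times out of four is a candidate for a lower severity, a narrower scope, or removal.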

Once the AI feedback is optimized, human reviewers should focus on critical areas that go beyond the AI's capabilities. Assign reviewers to evaluate sensitive components like authentication, payment systems, encryption, and overall architecture. Think of AI-generated suggestions as drafts - human reviewers must validate these changes to ensure they don't introduce subtle logic errors or conflict with specific business requirements.

To measure the effectiveness of this hybrid approach, track key metrics such as reductions in production bugs, time spent on code reviews, and developer satisfaction. Establish benchmarks before implementing AI and reassess after 3–6 months to determine the return on investment. Pay close attention to the false positive rate - advanced AI tools can cut down false alerts by over 90% when configured correctly. Use developer feedback to continuously improve the system - if an AI suggestion is marked as incorrect, leverage that data to refine your rules and enhance accuracy over time.
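A before-and-after comparison can be as simple as the sketch below. All numbers are invented placeholders, not results from any study, and "false positive rate" is defined here as the share of flagged items that reviewers judged not to be real issues.

```python
def false_positive_rate(flagged: int, confirmed: int) -> float:
    """Share of flagged issues that reviewers marked as not real problems."""
    if flagged == 0:
        return 0.0
    return (flagged - confirmed) / flagged

def percent_change(baseline: float, current: float) -> float:
    """Signed percentage change from the baseline value."""
    return (current - baseline) / baseline * 100

# Placeholder metrics: a baseline before adopting AI review and a later reassessment.
baseline = {"production_bugs": 20, "review_hours": 120}
after = {"production_bugs": 15, "review_hours": 90}

for metric in baseline:
    change = percent_change(baseline[metric], after[metric])
    print(f"{metric}: {change:+.1f}%")

print(false_positive_rate(flagged=200, confirmed=150))  # 0.25
```

Recording the baseline before rollout is the important part: without it, the 3–6 month reassessment has nothing to compare against.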

Conclusion

AI and manual code reviews each bring unique strengths to the table, and combining them creates a powerful blend of efficiency and insight. AI offers unmatched speed and consistency, quickly scanning through over 100,000 lines of code to identify syntax errors, style issues, and known security vulnerabilities. Meanwhile, manual reviews provide the critical context and judgment needed to assess business logic, architectural choices, and overall code readability - areas where AI falls short.

Many organizations are embracing this hybrid approach to speed up development without compromising quality. AI serves as the first layer of defense, handling routine issues so human reviewers can focus on high-impact areas like authentication systems, payment workflows, architecture, and mentoring junior developers. This combination creates a streamlined review process that enhances both speed and quality.

As JetBrains aptly puts it:

"Automation accelerates you, but people give you perspective. The best pipelines have both."

To make the most of this synergy, start with automated scans in your CI/CD pipeline to catch basic errors early. Developers can address these flagged issues before the code reaches human reviewers, who then focus on more nuanced, context-specific problems. This layered approach can shrink feature release timelines by as much as 30%, all while preserving the architectural soundness and business alignment that only human expertise can ensure.

AI shines in its ability to scale, while humans bring the nuanced judgment needed for complex decisions. Together, they create a balanced system where speed, scalability, and human insight come together to deliver better, faster code. By leveraging both, teams can build a robust and efficient code review process.

FAQs

How does combining AI and human review improve code review efficiency?

A hybrid approach to code review combines the speed and automation of AI with the insight and contextual understanding of human expertise. AI handles repetitive tasks like spotting syntax errors, dependency issues, and common security flaws, saving valuable time. On the other hand, human reviewers tackle what AI can't - evaluating architectural choices, understanding design goals, and addressing complex security concerns.

By automating the initial review process, teams can reduce context-switching and quickly identify critical problems, significantly shortening review cycles. Human involvement ensures precision, provides specialized knowledge, and creates opportunities for mentoring junior developers. This blend not only boosts code quality but also helps teams deliver features faster and more effectively.

A great example of this approach is Ranger, which integrates AI-powered QA testing with human validation. This ensures accurate results, shortens testing timelines, and maintains top-tier software quality - all while enhancing productivity.

What challenges does AI face in code reviews?

AI-driven code reviews do a solid job catching basic syntax errors and ensuring general style consistency. However, they often fall short when it comes to tackling context-specific challenges. These tools don’t fully grasp your project’s architecture, business logic, or evolving design standards. As a result, they can overlook critical bugs or offer suggestions that don’t align with your team’s unique workflows.

Another challenge for AI tools is managing large, complex pull requests. Unlike human reviewers, who develop a knack for spotting subtle, high-impact issues, AI can miss these finer details. Sometimes, it might even provide overly confident but incorrect suggestions, thanks to limitations in its training data or difficulty interpreting nuanced scenarios.

That’s where Ranger steps in. By blending AI-powered QA testing with human oversight, Ranger bridges the gap. It automates test creation, seamlessly integrates with platforms like GitHub and Slack, and ensures teams get the perfect balance of speed and accuracy. It’s the best of both worlds.

When should you choose manual code reviews over AI-driven reviews?

Manual reviews shine in situations that demand a deep understanding of context, like evaluating intricate business logic, architectural choices, or security-critical code. Humans bring a unique ability to assess design principles, code readability, and the subtle intent behind decisions - areas where AI tools might miss the mark. For instance, when dealing with large AI-generated code changes, human judgment is crucial for spotting potential logic errors or compliance concerns.

Beyond quality control, manual reviews are invaluable for knowledge sharing and mentorship. Senior developers can guide their teams by providing feedback on best practices, documenting critical assumptions, and identifying design-level issues. In high-stakes scenarios - such as high-risk releases, regulatory environments, or performance-sensitive projects - the dependability of human oversight far outweighs the speed offered by automation.

That said, a hybrid approach often delivers the most effective results. Tools like Ranger demonstrate this by blending AI's efficiency with human expertise, ensuring that nuanced issues are addressed and outcomes remain accurate.
