How CI/CD Testing Impacts Engineering Velocity

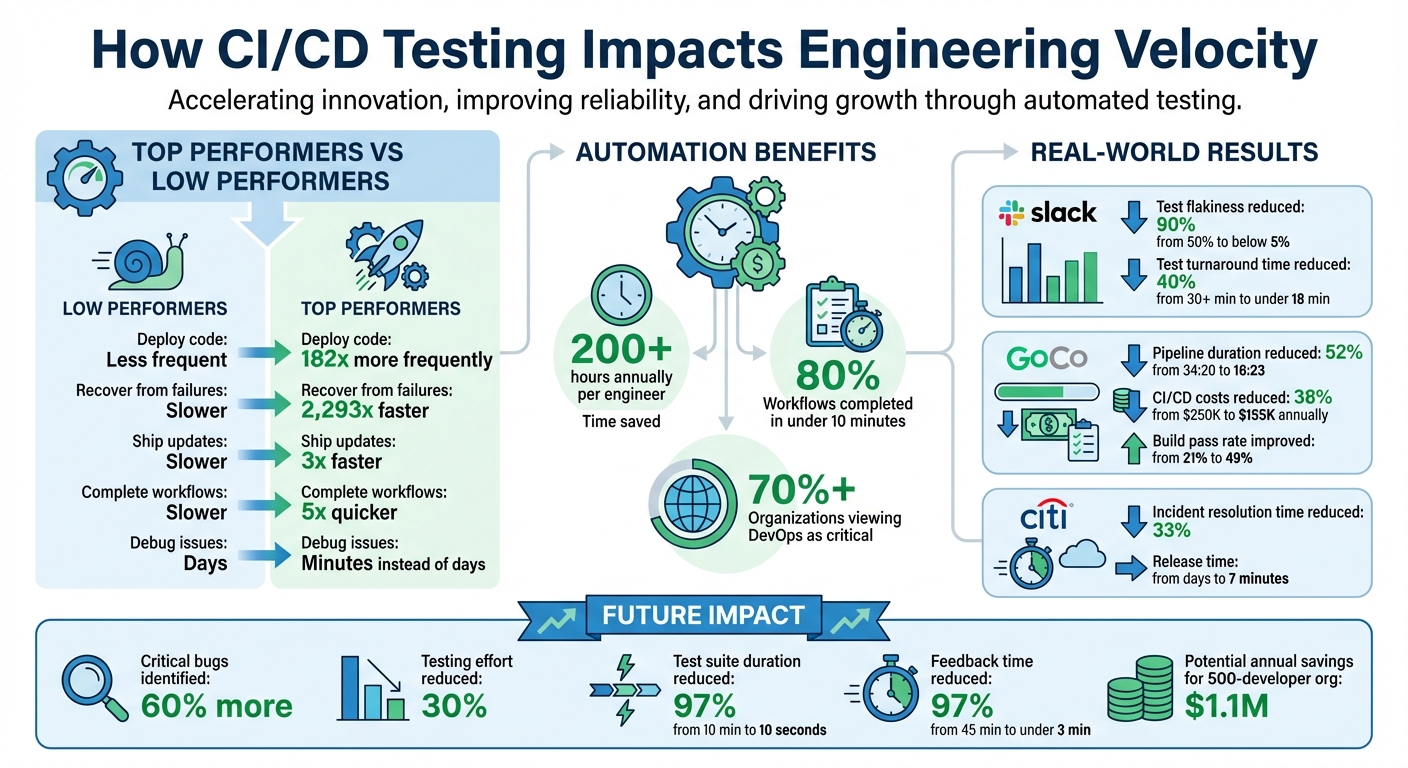

CI/CD testing speeds up software delivery while reducing bugs and downtime. Top-performing teams deploy code 182x more often and recover from failures 2,293x faster than low-performing teams. Here's how CI/CD testing improves engineering velocity:

- Key Metrics: Deployment frequency, lead time for changes, change failure rate, and mean time to recovery (MTTR) measure delivery efficiency.

- Automation Benefits: Saves 200+ hours annually per engineer, reduces test flakiness, and accelerates feedback loops.

- Case Studies: Slack cut test flakiness by 90% and reduced test times by 40%. GoCo halved pipeline durations and saved 38% in CI/CD costs.

- Future Trends: AI tools predict failures, automate testing, and shorten feedback cycles, saving time and boosting productivity.

Efficient CI/CD testing eliminates bottlenecks, enabling faster releases without sacrificing quality.
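To make the four key metrics above concrete, here is a minimal sketch of how they could be computed from a deployment log. The `Deployment` record and its field names are assumptions made for illustration; real CI/CD tools expose similar data under their own names.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record shape, not taken from any specific tool.
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None  # set only when a failed deploy was recovered


def delivery_metrics(deploys: list[Deployment], period_days: int) -> dict[str, float]:
    """Compute the four delivery metrics for one reporting period."""
    if not deploys:
        raise ValueError("need at least one deployment")
    # Lead time for changes: commit to production, in hours.
    lead_times = [(d.deployed_at - d.committed_at).total_seconds() / 3600 for d in deploys]
    failures = [d for d in deploys if d.failed]
    # Time to recovery: failed deploy to restored service, in minutes.
    recoveries = [
        (d.restored_at - d.deployed_at).total_seconds() / 60
        for d in failures
        if d.restored_at is not None
    ]
    return {
        "deploys_per_day": len(deploys) / period_days,
        "mean_lead_time_hours": sum(lead_times) / len(lead_times),
        "change_failure_rate": len(failures) / len(deploys),
        "mttr_minutes": sum(recoveries) / len(recoveries) if recoveries else 0.0,
    }


# Made-up sample data: two deploys in one day, one of which failed and was restored.
start = datetime(2025, 1, 6, 9, 0)
sample = [
    Deployment(start, start + timedelta(hours=2)),
    Deployment(
        start,
        start + timedelta(hours=4),
        failed=True,
        restored_at=start + timedelta(hours=4, minutes=30),
    ),
]
metrics = delivery_metrics(sample, period_days=1)
```

Tracking these values per week or per sprint, rather than as a single snapshot, makes it easier to see whether a testing change actually moved them.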

[Figure: CI/CD Testing Impact on Engineering Velocity: Key Performance Metrics]

Research Findings: How CI/CD Testing Affects Velocity

Performance Data from High-Performing Teams

Top-performing teams ship updates three times faster than their lower-performing counterparts. They also complete critical workflows five times faster, which translates to millions of dollars saved annually in development costs.

CircleCI's data shows that a striking 80% of workflows on its platform wrap up in under 10 minutes. This rapid feedback loop keeps developers in the zone, maintaining their momentum. As Rob Zuber, CTO of CircleCI, puts it:

"The top performing teams represented in this year's report clearly see being great at software delivery as a strategic advantage."

But it’s not just about speed. High-performing teams can debug issues in minutes instead of days, reclaiming thousands of hours each year for innovation. By leveraging optimized CI/CD platforms, these teams move from build to delivery three times faster than the industry average. Unsurprisingly, over 70% of organizations now view their DevOps strategies as critical to driving significant business value, which underscores the growing importance of software delivery performance in staying competitive.

These numbers highlight how automation plays a pivotal role in speeding up the development process.

How Automation Increases Velocity

Automation is a game-changer, saving each engineer more than 200 hours a year. With this extra time, developers can focus on building new features instead of slogging through repetitive test runs.

By adopting shift-left testing, teams catch bugs earlier in the development process, minimizing the time and effort needed for fixes. Automated tests - whether unit, integration, or end-to-end - immediately verify code after each commit, allowing developers to resolve issues before they escalate. Continuous delivery further ensures that code is always deployment-ready, thanks to automated checks in staging environments.

These benefits aren’t just theoretical. They’re backed by real-world testimonials:

"Ranger has an innovative approach to testing that allows our team to get the benefits of E2E testing with a fraction of the effort they usually require." – Brandon Goren, Software Engineer at Clay

"They make it easy to keep quality high while maintaining high engineering velocity. We are always adding new features, and Ranger has them covered in the blink of an eye." – Martin Camacho, Co-Founder of Suno

The Cost of Poor Testing Practices

Inefficient testing can wreak havoc on development workflows. Flaky and slow tests erode trust, block pipelines, and delay releases. Legacy systems and poorly designed tests create bottlenecks, leading to resource contention and sluggish execution. Overloaded pipelines with too many code changes slow builds, while misconfigured pipelines can skip compliance steps or expose sensitive information like hardcoded credentials - risks that could result in hefty regulatory penalties.

To avoid these pitfalls, teams need proper tools for observability and maintenance. Tools that identify and address unstable tests help maintain pipeline speed and developer confidence. Impact analysis tools that automatically skip irrelevant tests can also significantly reduce execution times. Without these measures, testing can quickly turn from a productivity booster into a major bottleneck.
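As a rough illustration of the impact analysis idea, the sketch below selects only the tests whose covered files overlap a change set. The `coverage_map` structure and the file names are hypothetical; in practice this mapping would come from coverage data or a build graph.

```python
def select_impacted_tests(
    coverage_map: dict[str, set[str]], changed_files: set[str]
) -> list[str]:
    """Return tests whose covered files overlap the change set, sorted for stable output."""
    return sorted(
        test for test, covered in coverage_map.items() if covered & changed_files
    )


# Hypothetical mapping from each test file to the source files it exercises.
coverage_map = {
    "test_checkout.py": {"cart.py", "payment.py"},
    "test_login.py": {"auth.py"},
    "test_profile.py": {"auth.py", "profile.py"},
}

# A change touching only auth.py skips the checkout tests entirely.
selected = select_impacted_tests(coverage_map, {"auth.py"})
```

A mapping like this goes stale as code moves, so a periodic full run is still a sensible safety net.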

Case Studies: Companies That Improved Velocity with CI/CD Testing

Case Study: Slack's Faster Test Turnaround with Automated Testing

In 2021, Slack encountered a major bottleneck in its Webapp monorepo. Developers were stuck waiting up to 30 minutes for test results, and about 50% of pull requests suffered from flakiness - tests failing randomly without actual code issues. To tackle this, Slack engineers revamped their testing workflow. Instead of running all 40,000 tests before every merge, they adopted a tiered testing approach. Under this system, fewer than 1% of tests - focused on critical functionality - ran pre-merge, around 10% ran post-merge to block deployments, and the remaining tests were executed in batch regression pipelines.

By integrating Cypress.io for test execution and building a custom Slack bot to deliver immediate post-merge alerts, they achieved impressive results. Slack cut its p95 test turnaround time by over 40%, reducing it from more than 30 minutes to under 18 minutes consistently. They also slashed test flakiness from 50% to below 5%. This streamlined testing process gave developers the confidence to merge code more efficiently, keeping development on track and avoiding unnecessary delays.

Case Study: GoCo's 52% Pipeline Duration Reduction with Unlimited Parallelization

GoCo, an HR software company, faced significant challenges with their CI/CD pipeline in 2024. Their pipeline was constrained by a 50-container parallelization cap, which caused pipeline runs to drag on for 34 minutes and 20 seconds. To solve this, Co-founder and CTO Jason Wang spearheaded a migration to a platform that offered unlimited parallelization. By removing the container limit, GoCo was able to run large test suites concurrently without delays caused by queuing.

The results were dramatic: pipeline duration dropped by 52%, going from 34 minutes and 20 seconds to just 16 minutes and 23 seconds. Additionally, GoCo reduced annual CI/CD costs by 38%, cutting expenses from $250,000 to $155,000. Build pass rates also improved significantly, jumping from 21% to 49%. These improvements not only saved time and money but also enabled faster feature rollouts and boosted engineering productivity.
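The percentages above follow directly from the before-and-after figures. A quick check, using a small helper written for this illustration:

```python
def percent_reduction(before: float, after: float) -> float:
    """Return how much smaller `after` is than `before`, as a percentage."""
    return (before - after) / before * 100


before_seconds = 34 * 60 + 20  # 34 minutes 20 seconds
after_seconds = 16 * 60 + 23   # 16 minutes 23 seconds

duration_cut = percent_reduction(before_seconds, after_seconds)  # about 52%
cost_cut = percent_reduction(250_000, 155_000)                   # about 38%
```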

Case Study: Citi's 33% Faster Incident Resolution

Citi revolutionized its software delivery process for its 20,000 engineers by adopting automated continuous delivery across the organization. Before this transformation, code releases required days of manual coordination and approvals. With the implementation of Harness Continuous Delivery, Citi reduced release times to just seven minutes, allowing for much quicker incident resolution.

This shift had a tangible impact: the mean time to resolution for production incidents dropped by about 33%. By eliminating manual steps in the release process, engineers were empowered to deploy fixes faster, ensuring smoother operations and minimizing downtime.

These examples demonstrate how smarter CI/CD testing practices - like automation, parallelization, and real-time monitoring - can drastically improve development velocity. They set the stage for further advancements in monitoring and scaling within CI/CD testing.

How to Monitor and Scale CI/CD Testing Effectively

Following the case studies on improved velocity, let’s explore practical ways to monitor and scale CI/CD testing efficiently.

Using Test Parallelization

Running tests simultaneously across multiple machines or environments is one of the quickest ways to reduce pipeline time. Parallel test execution spreads the workload across different infrastructure, speeding up feedback loops and eliminating long waits for developers. For example, internal testing has shown that using intelligent test selection and dynamic splitting can shrink test suite durations by more than 97% - from 10 minutes to just 10 seconds.

The secret to effective parallelization lies in ensuring that tests are both stateless and independent. Each test should run without interfering with others or relying on shared data. Isolated environments for every test run are crucial to prevent configuration drift and to ensure every build starts fresh. On top of that, keeping an eye on test failures and resource usage ensures these improvements consistently benefit the pipeline.
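One common way to split independent tests across workers is by historical run time, so that no single worker becomes the long pole. The sketch below uses a greedy longest-first heuristic. The test names and durations are made up, and real CI platforms have their own splitting logic, so treat this as an illustration of the idea rather than any vendor's algorithm.

```python
import heapq


def split_by_duration(durations: dict[str, float], workers: int) -> list[list[str]]:
    """Greedy longest-first split: hand each test to the currently lightest worker."""
    if workers < 1:
        raise ValueError("workers must be >= 1")
    # Min-heap of (total seconds assigned so far, worker index).
    heap = [(0.0, i) for i in range(workers)]
    heapq.heapify(heap)
    buckets: list[list[str]] = [[] for _ in range(workers)]
    # Longest tests first; ties broken by name so the output is deterministic.
    for name, seconds in sorted(durations.items(), key=lambda kv: (-kv[1], kv[0])):
        load, idx = heapq.heappop(heap)
        buckets[idx].append(name)
        heapq.heappush(heap, (load + seconds, idx))
    return buckets


# Hypothetical timings in seconds; run serially these take 240 seconds.
durations = {"test_a": 90, "test_b": 60, "test_c": 45, "test_d": 30, "test_e": 15}
buckets = split_by_duration(durations, workers=2)
slowest_worker = max(sum(durations[t] for t in bucket) for bucket in buckets)
```

With two workers the slowest bucket takes 120 seconds, half the serial time. This only holds if the tests are stateless and independent, which is why that requirement comes first.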

Tracking Test Failures and Resource Usage

Real-time monitoring of test execution metrics, failure trends, and resource consumption is essential for identifying flaky tests and infrastructure bottlenecks before they disrupt development. Flaky tests - those that fail inconsistently due to timeouts or external dependencies - can erode confidence in the pipeline and waste valuable engineering time. By tracking these failures, teams can isolate problematic tests and address them quickly.
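A simple working definition of "flaky" is a test that both passes and fails on the same commit. The sketch below applies that rule to a list of run records. The record shape `(test_name, commit_sha, passed)` and the sample data are assumptions for illustration.

```python
from collections import defaultdict


def find_flaky_tests(runs: list[tuple[str, str, bool]]) -> set[str]:
    """Flag tests with mixed outcomes on the same commit.

    Each run is (test_name, commit_sha, passed).
    """
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for test, sha, passed in runs:
        outcomes[(test, sha)].add(passed)
    # Both True and False seen for one (test, commit) pair means the code did not
    # change between runs, so the test itself is unstable.
    return {test for (test, _sha), seen in outcomes.items() if len(seen) == 2}


# Made-up run history.
runs = [
    ("test_upload", "abc123", False),
    ("test_upload", "abc123", True),   # passed on retry with no code change
    ("test_login", "abc123", False),
    ("test_login", "abc123", False),   # fails consistently: likely a real bug
    ("test_search", "abc123", True),
]
flaky = find_flaky_tests(runs)
```

Separating consistent failures from mixed outcomes matters because the first group points at the code and the second at the test or its environment.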

Monitoring resource usage is equally important. Using dynamic resource allocation with cloud-based or containerized environments ensures infrastructure scales appropriately, avoiding bottlenecks caused by under-provisioning while keeping idle costs low. Caching frequently used dependencies and build artifacts between runs can also cut down on redundant operations, saving time and money. With long build interruptions costing developers over 20 minutes of productivity per instance, maintaining fast, dependable pipelines directly supports engineering efficiency. Building on these practices, AI brings even more opportunities to enhance test scalability and reliability.
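Dependency caching usually hinges on a cache key that changes only when the dependencies change. A minimal sketch, assuming the key is derived from the contents of a lockfile; the `deps` prefix and the 16-character digest length are arbitrary choices for this example.

```python
import hashlib


def dependency_cache_key(lockfile_bytes: bytes, prefix: str = "deps") -> str:
    """Build a cache key that changes only when the lockfile contents change."""
    digest = hashlib.sha256(lockfile_bytes).hexdigest()[:16]
    return f"{prefix}-{digest}"


# Hypothetical lockfile contents.
key_before = dependency_cache_key(b"requests==2.32.0\n")
key_same = dependency_cache_key(b"requests==2.32.0\n")
key_after = dependency_cache_key(b"requests==2.32.3\n")
```

Identical lockfiles produce the same key and reuse the cached install, while any version bump produces a new key and triggers a fresh one.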

Using AI for Smarter Testing

AI-powered QA tools are reshaping how teams create, maintain, and scale their tests. Platforms like Ranger leverage AI agents to navigate websites and automatically generate test scripts, dramatically reducing the manual effort needed to build and maintain tests. These tools also automate the triage of test failures, distinguishing real bugs from false alarms so engineers can focus on critical issues. Teams using AI-powered QA tools have reported saving over 200 hours per engineer annually on testing tasks.

Ranger adopts a hybrid approach, combining AI-driven generation with human oversight - a "cyborg" model that ensures test code remains both readable and reliable. The platform integrates seamlessly with tools like GitHub and Slack, running tests on every code change and delivering real-time alerts when issues arise. This end-to-end coverage scales effortlessly with your team's output, all without adding extra DevOps overhead.

"Smarter Testing has helped our team meaningfully shorten the cycle time between development, QA, and release. By automatically prioritizing the tests that matter most to our current work, we're able to move faster without sacrificing stability or coverage." - Ben Horne, Director of Engineering, FORM

Future Trends in CI/CD Test Optimization

The landscape of CI/CD testing is rapidly advancing, with systems becoming smarter, capable of predicting failures and adapting on the fly. These changes aim to boost engineering efficiency while cutting down on manual tasks.

AI-Driven Predictive Analytics

Predictive analytics is taking automated testing to the next level, shifting CI/CD pipelines from simply detecting bugs to actively preventing them. By analyzing factors like code churn, feature dependencies, and commit history, AI can intelligently select and prioritize tests. This proactive approach has proven effective - organizations using predictive models report identifying 60% more critical bugs while reducing testing efforts by 30%.
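To show the shape of test prioritization, the sketch below ranks tests with a hand-written heuristic: historical failure rate plus a bonus when the test covers a changed file. This is a stand-in for the predictive models described above, not one of them. The `HistoryRecord` type, the `change_weight` value, and the sample data are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class HistoryRecord:
    # Hypothetical per-test history; a real system would pull this from CI data.
    name: str
    runs: int
    failures: int
    covered_files: set[str] = field(default_factory=set)


def risk_score(
    record: HistoryRecord, changed_files: set[str], change_weight: float = 0.5
) -> float:
    """Heuristic score: past failure rate, boosted if the test covers a changed file."""
    failure_rate = record.failures / record.runs if record.runs else 0.0
    touches_change = 1.0 if record.covered_files & changed_files else 0.0
    return failure_rate + change_weight * touches_change


def prioritize(records: list[HistoryRecord], changed_files: set[str]) -> list[str]:
    """Return test names ordered from highest to lowest risk."""
    ranked = sorted(records, key=lambda r: (-risk_score(r, changed_files), r.name))
    return [r.name for r in ranked]


history = [
    HistoryRecord("test_login", runs=100, failures=1, covered_files={"auth.py"}),
    HistoryRecord("test_payment", runs=100, failures=2, covered_files={"payment.py"}),
    HistoryRecord("test_search", runs=100, failures=10, covered_files={"search.py"}),
]
order = prioritize(history, changed_files={"payment.py"})
```

Here the payment test runs first because it covers the changed file, even though the search test fails more often historically. A learned model would replace `risk_score` but keep the same ranking step.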

One of the most exciting developments is the ability to correlate test failures with business outcomes. Future predictive tools will help teams prioritize fixes based on their impact on revenue and customer retention, rather than just technical severity. These models can also predict release readiness with 95% accuracy, and about 60% of production issues can now be anticipated using existing test data that traditional methods often overlook. As Jun Yu Tan, Founding Engineer at Tusk, explains:

"Predictive CI represents a paradigm shift from reactive QA to proactive quality engineering, a systematic approach to anticipating problems."

Agentic AI for Continuous Resilience Testing

Autonomous AI agents are stepping in to handle time-consuming maintenance tasks, like fixing flaky tests, updating dependencies, and resolving configuration conflicts. For example, during CircleCI's private beta of "Chunk", an autonomous CI agent was able to analyze and open pull requests to fix 90% of identified flaky tests, ensuring stable builds with minimal human intervention.

These AI agents extend their capabilities beyond test execution. They can navigate websites, generate readable test scripts (such as Playwright scripts), and even triage failures to separate genuine bugs from irrelevant noise. A notable example is OpenAI's collaboration with Ranger to develop a web browsing harness for the o3-mini model. This project demonstrated agentic features, enabling the model to interact across various web environments while maintaining high-quality results for their research. By blending AI automation with human oversight, teams can achieve sustainable improvements in resilience.

Adopting Continuous Improvement

The future of CI/CD lies with teams that view their pipelines as evolving systems, constantly learning and adapting. Validation is moving from being a post-development step to a parallel process that runs at machine speed, often completing checks by the time a pull request is submitted. Context-aware testing is also dramatically reducing feedback times, cutting them from 45 minutes to under 3 minutes.

AI serves as an amplifier for existing strengths. As Ron Powell from CircleCI puts it:

"AI doesn't create organizational excellence; it amplifies what already exists. For teams with solid foundations, AI is a force multiplier. For teams with broken processes and dysfunctional systems, AI magnifies the chaos."

To get started, teams should focus on using AI to prioritize their current test suites before advancing to fully autonomous test generation. Maintaining high-quality data is crucial, as AI accuracy depends on clean and well-organized datasets. The payoff can be substantial: in a 500-developer organization, reducing workflow times by just 10 minutes could lead to annual savings of $1.1 million.

These advancements are paving the way for a future where continuous optimization fuels faster and more efficient engineering processes.

Conclusion

Streamlined CI/CD testing eliminates bottlenecks, speeds up feedback loops, and accelerates releases - all while maintaining high-quality standards. According to data, teams that rely on heavily automated pipelines are 1.5 times more likely to double their deployment speed compared to those sticking with manual workflows. Slack's transformation offers a clear example of how strategic changes can lead to measurable success.

Automation isn't the only factor - continuous monitoring and smart scaling are critical for keeping pipelines efficient. Take Samsara, for instance: they managed to maintain 15-minute CI speeds despite a 500% increase in tests by using selective testing and parallelization. Meanwhile, autonomous validation tools have cut feedback time by 97% and reduced failure recovery time by 50%. These improvements translate directly into productivity gains. For a 50-person engineering team, optimizing CI/CD can reclaim around 93,500 minutes of engineering time every month - that’s roughly 1.5 extra hours of productivity per developer each day.
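The per-developer figure can be checked with a short calculation. The 21 working days per month used here is an assumption, since the source does not state how the monthly total was converted to a daily one.

```python
def reclaimed_hours_per_dev_per_day(
    total_minutes_per_month: float,
    team_size: int,
    working_days_per_month: int = 21,  # assumption, not stated in the source
) -> float:
    """Convert a team-wide monthly total in minutes to hours per developer per day."""
    minutes_per_dev_per_day = total_minutes_per_month / team_size / working_days_per_month
    return minutes_per_dev_per_day / 60


hours = reclaimed_hours_per_dev_per_day(93_500, team_size=50)  # roughly 1.5
```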

CircleCI highlights how autonomous validation is reshaping the economics of software delivery. Modern platforms like Ranger combine AI-driven test creation with human oversight, integrating seamlessly with tools like Slack and GitHub. This approach not only identifies real bugs faster but also automates maintenance, setting the stage for smarter and more efficient CI/CD practices.

FAQs

How does CI/CD testing improve engineering speed and efficiency?

CI/CD testing speeds up engineering workflows by automating the build and test processes. This automation helps teams catch issues early, release updates more often, and focus on creating new features rather than troubleshooting errors. Automated tests also ensure smoother workflows, fewer bugs, and quicker feedback loops.

When done right, CI/CD testing can boost delivery speed by up to 40% and save individual engineers more than 200 hours each year. The result? Faster development cycles and more reliable products, allowing teams to deliver high-quality software at a quicker pace.

How does AI improve CI/CD testing and boost engineering efficiency?

AI brings a transformative edge to CI/CD testing by taking over repetitive and error-prone tasks, slashing testing time while boosting precision. Through automated test generation and upkeep, teams get quicker feedback, identify bugs earlier, and roll out features faster. The outcome? Better software quality and fewer headaches after release.

Another game-changer is self-healing test scripts. These scripts adjust automatically to changes, cutting down the need for constant maintenance. With AI’s advanced root-cause analysis, bugs are spotted and resolved faster, while critical tests are prioritized for tighter feedback loops and broader test coverage. These improvements don’t just save time - engineering teams gain back hundreds of hours each year and reduce testing costs, paving the way for faster, scalable delivery of top-notch software.

How does monitoring and scaling CI/CD testing improve engineering efficiency?

Effective CI/CD testing is all about improving engineering workflows by emphasizing visibility and scalability. Keeping an eye on key metrics - like test pass rates, flakiness, and mean time to detect (MTTD) - helps teams quickly spot and address issues. With AI-powered tools in the mix, teams can zero in on root causes faster, cut down defect resolution times, and catch flaky tests before they derail a release. Real-time alerts integrated into platforms like Slack or GitHub ensure developers stay informed without the hassle of constant context-switching.

When it comes to scaling testing, adopting parallel, container-based test execution is a game-changer. By tapping into cloud resources, teams can achieve faster test results. Self-healing test scripts further streamline the process by adapting to UI changes, slashing maintenance time. Focusing on critical test paths is another must - it ensures feedback loops stay under 10 minutes, which is essential for keeping developers productive. Pairing these practices with approaches like gated rollouts and incremental deployments ensures both speed and reliability in the delivery pipeline.
