AI-Driven Resource Allocation in QA Testing

AI is transforming QA testing by tackling inefficiencies in resource allocation. Here's how:

- Manual QA challenges: Over 30% of testing resources are wasted on low-priority tasks, while 43% of team members face imbalanced workloads. Critical bugs still make it to production in 42% of cases, costing mid-sized companies an average of $2.3 million annually.

- AI solutions: AI predicts high-risk areas, prioritizes test cases, and automates script updates. It also balances workloads in real time, reducing bottlenecks and improving efficiency.

- Key benefits: Teams save time and costs by running fewer but more relevant tests. AI-driven methods improve test coverage by up to 85%, reduce issue detection time by up to 90%, and boost productivity by up to 40%.

- Ranger's approach: By combining AI automation with human oversight, Ranger ensures reliable testing, faster releases, and reduced maintenance burdens.

AI-powered tools like Ranger help QA teams focus on impactful tasks, reduce errors, and maintain high-quality standards in fast-paced development environments.

Problems with Manual QA Resource Allocation

Manual Task Assignment Leads to Wasted Resources

When QA managers rely on manual methods to assign testing tasks, it often leads to inefficiencies. Around 43% of team members find themselves idle, while others are overwhelmed with too much work. This imbalance isn't just unfair - it’s costly. Teams lose valuable hours because they lack a real-time view of task distribution and workloads.

On top of that, memory limitations make tracking even harder. Testers can forget up to 90% of new information in a single week. This means important details about bugs, test results, or edge cases can easily slip through the cracks. For example, a critical defect flagged last week might be forgotten today, leading to inconsistent bug management and prioritization.

Manual processes also encourage a narrow focus on immediate risks. Teams tend to prioritize visible issues - like bugs in newly launched features - while overlooking smaller problems that could escalate over time. Without data-driven insights, it’s nearly impossible to predict which minor issue could lead to a major failure months later. As codebases expand, these inefficiencies only grow, putting even more strain on manual resource allocation.

Scaling Problems with Larger Codebases

As software applications grow, the workload required to maintain thorough test coverage becomes overwhelming. Two-thirds of companies test fewer than half of their user workflows because manual testing simply can’t keep up. Writing test scripts for every possible scenario demands resources that many teams don’t have, leaving critical functionalities untested.

The problem gets worse as the codebase expands. For every four front-end developers, teams typically need one test automation engineer just to maintain test suites. On a team of 20 front-end developers, that means dedicating five full-time engineers solely to maintaining existing tests, before anyone writes a new one. For most organizations, this setup is financially unsustainable, forcing teams to choose between proper testing and meeting release deadlines.

And as development accelerates, manual resource allocation becomes even less effective.

Unpredictable Resource Needs in CI/CD Pipelines

CI/CD pipelines expose the weaknesses of manual QA processes. In these environments, manual testing can consume up to 50% of project timelines, creating significant delays. The rapid pace of code changes - known as "code churn" - means test scripts frequently break, and manual methods struggle to keep up with the constant updates.

The unpredictable nature of CI/CD pipelines compounds the problem. Some weeks require minimal QA effort, while others demand an all-hands-on-deck approach. Manual allocation can’t adjust quickly enough to these shifts, leaving teams either overstaffed during slow periods or scrambling to meet the demands of high-pressure deadlines. This unpredictability makes it nearly impossible to plan resources or budgets effectively for QA operations.

How AI Improves Resource Allocation

Risk-Based Test Prioritization

AI has changed the game for how teams decide which tests to run. By analyzing historical defect data, code complexity, and developer activity, AI can pinpoint potential issues with remarkable precision. For example, it’s possible to identify 50% of failures by running just 0.2% of tests. This is achieved through machine learning algorithms that assign real-time risk scores to tests based on code analysis.
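To make the idea of a risk score concrete, here is a minimal Python sketch. It assumes each test already carries three normalized signals drawn from the factors named above (historical failure rate, complexity of the code it covers, and recent developer activity in that code) and combines them with fixed, hypothetical weights. A real system would learn the weights from historical defect data rather than hard-code them; `TestRecord`, `WEIGHTS`, and the sample values are illustrative only.

```python
from dataclasses import dataclass

@dataclass
class TestRecord:
    name: str
    failure_rate: float   # fraction of past runs that failed (0..1)
    complexity: float     # normalized complexity of the code it covers (0..1)
    recent_churn: float   # normalized recent commit activity in that code (0..1)

# Hypothetical hand-picked weights; a real system would learn these from defect history.
WEIGHTS = {"failure_rate": 0.5, "complexity": 0.2, "recent_churn": 0.3}

def risk_score(t: TestRecord) -> float:
    """Weighted sum of risk signals; a higher score means run the test earlier."""
    return (WEIGHTS["failure_rate"] * t.failure_rate
            + WEIGHTS["complexity"] * t.complexity
            + WEIGHTS["recent_churn"] * t.recent_churn)

def prioritize(tests: list[TestRecord], budget: int) -> list[str]:
    """Return the names of the `budget` highest-risk tests, riskiest first."""
    ranked = sorted(tests, key=risk_score, reverse=True)
    return [t.name for t in ranked[:budget]]

if __name__ == "__main__":
    suite = [
        TestRecord("test_checkout", 0.30, 0.8, 0.9),
        TestRecord("test_login", 0.05, 0.3, 0.1),
        TestRecord("test_search", 0.10, 0.6, 0.7),
    ]
    print(prioritize(suite, budget=2))  # ['test_checkout', 'test_search']
```

With a budget of two, the low-risk login test is deferred; raising the budget simply extends the same ranked list.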

The impact is huge: integrating AI into risk-based test prioritization can slash testing costs by 30% and cut execution time by 70%. This means teams save valuable development hours that can be redirected to other critical tasks. In some cases, such implementations have even led to a 20% boost in conversion rates.

One of the standout benefits is how AI eliminates inefficiency. By identifying redundant or low-priority test cases, AI removes them from the execution queue. This clears up infrastructure and allows teams to focus on high-value work that truly matters.
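One established way to spot redundant tests is test suite minimization. The sketch below uses the classic greedy set-cover heuristic, swapped in here for illustration rather than taken from any particular AI tool: it keeps the test that adds the most uncovered code, repeats until everything the full suite covers is covered, and leaves the rest as removal candidates. It assumes per-test coverage data (for example, from a coverage tool) is already available; the test names and code units are made up.

```python
def minimize_suite(coverage: dict[str, set[str]]) -> list[str]:
    """Greedy set cover: keep the test adding the most not-yet-covered code units,
    and repeat until the kept tests cover everything the full suite covers."""
    uncovered = set().union(*coverage.values())
    remaining = dict(coverage)
    kept: list[str] = []
    while uncovered:
        # max() returns the first of several equal candidates, so sorting the
        # names first makes tie-breaking deterministic (alphabetical).
        best = max(sorted(remaining), key=lambda name: len(remaining[name] & uncovered))
        kept.append(best)
        uncovered -= remaining.pop(best)
    return kept

if __name__ == "__main__":
    cov = {
        "test_checkout_full": {"cart.add", "cart.total", "pay.charge"},
        "test_checkout_total": {"cart.add", "cart.total"},  # subset of the test above
        "test_refund": {"pay.refund"},
    }
    print(minimize_suite(cov))  # ['test_checkout_full', 'test_refund']
```

Coverage alone does not prove a test is redundant, since two tests can hit the same lines while checking different assertions, so the dropped tests are best treated as candidates for human review.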

"AI improves efficiency, but humans bring business context, domain expertise, and real-world judgment to ensure intelligent test prioritization is accurate and effective" - Janakiraman Jayachandran, Global Head of Testing at Aspire Systems

These risk-based methods also pave the way for better resource forecasting, ensuring teams can plan more effectively.

Predictive Resource Forecasting

Unpredictability often disrupts CI/CD pipelines, but predictive forecasting powered by AI offers a solution. By analyzing code changes and historical data, AI predicts which tests are relevant for each commit. This avoids overwhelming pipelines with unnecessary tests. For instance, instead of running a full suite of 50,000 tests that would take six hours, AI can narrow it down to 3,000 relevant tests that complete in under 30 minutes - all while still catching most defects.
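The dependency-based core of per-commit test selection can be sketched in a few lines. This assumes a precomputed map from each test to the source files it exercises (for instance, gathered from per-test coverage) and selects every test whose dependencies overlap the commit's changed files, plus a hypothetical set of always-run smoke tests. A predictive model would rank or extend this set; that learned part is not shown.

```python
def select_tests(changed_files: set[str],
                 test_deps: dict[str, set[str]],
                 always_run: frozenset[str] = frozenset()) -> list[str]:
    """Return tests whose recorded file dependencies overlap the changed files,
    plus tests that must always run (e.g. smoke tests), in sorted order."""
    affected = {name for name, deps in test_deps.items() if deps & changed_files}
    return sorted(affected | always_run)

if __name__ == "__main__":
    deps = {
        "test_cart_total": {"cart.py", "pricing.py"},
        "test_login_flow": {"auth.py"},
        "test_search_rank": {"search.py"},
    }
    print(select_tests({"pricing.py"}, deps, frozenset({"test_login_flow"})))
    # ['test_cart_total', 'test_login_flow']
```

The figures in the paragraph are also consistent with each other: six hours for 50,000 tests averages about 0.43 seconds per test, so 3,000 tests at the same average take roughly 21.6 minutes run sequentially, inside the 30-minute window.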

AI doesn’t stop there. It continuously monitors workloads and predicts future needs to balance resources and avoid bottlenecks. This approach can boost team productivity and performance by up to 40%, addressing a common issue where 43% of team members feel underutilized while others are overloaded.
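Balancing work across people or runners is a scheduling problem. A simple, well-known heuristic is longest-processing-time-first (LPT): sort tasks by estimated duration, largest first, and give each one to whoever currently has the smallest load. The sketch assumes duration estimates already exist (for example, from historical run times); the task names, hours, and workers are hypothetical, and LPT stands in for whatever model a given tool actually uses.

```python
import heapq

def balance(tasks: dict[str, float], workers: list[str]) -> dict[str, list[str]]:
    """Longest-processing-time-first (LPT): assign each task, largest first,
    to the worker with the smallest current load."""
    heap = [(0.0, w) for w in workers]  # (current load in hours, worker)
    heapq.heapify(heap)
    plan: dict[str, list[str]] = {w: [] for w in workers}
    # sorted() is stable, so equal-length tasks keep their input order.
    for task, hours in sorted(tasks.items(), key=lambda kv: kv[1], reverse=True):
        load, worker = heapq.heappop(heap)
        plan[worker].append(task)
        heapq.heappush(heap, (load + hours, worker))
    return plan

if __name__ == "__main__":
    suites = {"regression": 5.0, "api": 3.0, "ui": 3.0, "smoke": 1.0}
    print(balance(suites, ["alice", "bob"]))
    # {'alice': ['regression', 'smoke'], 'bob': ['api', 'ui']}
```

Here both workers end up with six hours. Rerunning the assignment as estimates change is one simple way to keep loads even as priorities shift.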

By focusing on relevant tests, AI reduces computational resource usage, enabling more frequent testing without driving up infrastructure costs. For mid-sized companies, this is a game-changer, as delayed feedback loops in testing can rack up an average of $2.3 million annually in rework costs.

As predictive forecasting optimizes resource allocation, the addition of self-healing test scripts further minimizes manual intervention in testing environments.

Self-Healing Test Scripts and Maintenance

AI also takes the hassle out of test maintenance through self-healing frameworks. These frameworks automatically detect, diagnose, and repair broken tests, a crucial feature for handling frequent changes in application interfaces. For example, when the attributes or paths of a UI element change, dynamic locator identification ensures the testing framework can still find the element, preventing test failures without requiring human input.
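Dynamic locator identification can be illustrated with an ordered fallback chain. The sketch models the page as a plain list of attribute dictionaries instead of a live browser, so it runs without any testing framework; in practice each lookup would be a query against the real page. The locator order, attribute names, and sample element are assumptions. Returning the locator that matched lets the framework flag the broken primary locator for someone to update.

```python
from typing import Optional

Element = dict[str, str]  # toy stand-in for a DOM element's attributes

def find(dom: list[Element], attr: str, value: str) -> Optional[Element]:
    """Return the first element whose attribute `attr` equals `value`, if any."""
    return next((el for el in dom if el.get(attr) == value), None)

def locate_with_healing(dom: list[Element],
                        locators: list[tuple[str, str]]) -> tuple[Element, tuple[str, str]]:
    """Try locators in priority order; return the element and the locator that matched."""
    for attr, value in locators:
        el = find(dom, attr, value)
        if el is not None:
            return el, (attr, value)
    raise LookupError(f"no locator matched: {locators}")

if __name__ == "__main__":
    # After a redesign the button's id changed, but its test id and label did not.
    page = [{"tag": "button", "id": "checkout-submit",
             "data-testid": "place-order", "text": "Place order"}]
    chain = [("id", "submit-btn"), ("data-testid", "place-order"), ("text", "Place order")]
    element, used = locate_with_healing(page, chain)
    if used != chain[0]:
        print(f"healed: primary {chain[0]} failed, matched via {used}")
```

Commercial self-healing engines often score candidates by similarity across many attributes instead of trying a fixed list; the fixed order here keeps the example short.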

Self-healing technology does more than just repair broken tests. It uses intelligent waiting mechanisms that adapt to application response times, significantly reducing flaky tests and eliminating the need for manual fine-tuning. On top of that, anomaly detection flags unexpected UI changes and allows the system to address issues autonomously.
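Adaptive waiting replaces fixed sleeps with two pieces: a timeout derived from how fast the application has actually been responding, and a polling loop that backs off while it waits. In this sketch the timeout is the mean plus three standard deviations of recent response times, with a one-second floor; that rule, the backoff factor, and the helper names are illustrative assumptions, not a specific framework's API.

```python
import statistics
import time
from typing import Callable

def adaptive_timeout(latencies_s: list[float], k: float = 3.0, floor_s: float = 1.0) -> float:
    """Timeout = mean + k * sample standard deviation of recent response times,
    never shorter than floor_s."""
    if len(latencies_s) < 2:
        return floor_s  # stdev needs at least two samples
    mean = statistics.mean(latencies_s)
    spread = statistics.stdev(latencies_s)
    return max(floor_s, mean + k * spread)

def wait_until(condition: Callable[[], bool], timeout_s: float,
               interval_s: float = 0.05, backoff: float = 1.5,
               max_interval_s: float = 1.0) -> bool:
    """Poll condition() until it returns True or timeout_s elapses.
    The pause between polls grows by `backoff` up to max_interval_s."""
    deadline = time.monotonic() + timeout_s
    while True:
        if condition():
            return True
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            return False
        time.sleep(min(interval_s, remaining))
        interval_s = min(interval_s * backoff, max_interval_s)

if __name__ == "__main__":
    recent = [0.5, 0.7, 0.6, 0.9, 0.8]  # seconds, e.g. from earlier runs
    print(round(adaptive_timeout(recent), 2))  # 1.17
```

Because the timeout tracks observed behavior, a slow staging environment gets a longer wait automatically, while a fast one fails quickly when something is genuinely broken.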

The result? A major reduction in the maintenance burden. Organizations using self-healing frameworks report noticeable decreases in both maintenance time and overall testing costs. By automating script repairs, teams can adopt more resilient testing strategies and speed up release cycles. This also frees up time for human testers to focus on exploratory testing and strategic quality assurance efforts, adding more value to the development process.


Ranger's Approach to AI-Driven QA Testing

Ranger takes QA testing to the next level by blending AI's capabilities with the precision of human expertise. This hybrid approach ensures efficient, reliable, and scalable testing for modern engineering teams.

AI-Powered Test Creation and Maintenance

Ranger's hybrid QA model leverages AI to generate Playwright tests automatically through adaptive web agents. However, the process doesn’t stop there - human QA experts review every AI-generated test to ensure the code is clear, dependable, and up to standard. This method tackles a common pain point in automated testing: brittle scripts that break whenever the UI changes.

The platform also simplifies test maintenance by automatically filtering out flaky tests and irrelevant noise. This allows engineering teams to concentrate on uncovering real bugs and addressing high-priority issues. Additionally, Ranger, in collaboration with OpenAI, has developed a web browsing harness that taps into the o3-mini model's dynamic capabilities across diverse web environments.
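A common heuristic for filtering flaky tests is to flag any test that both passed and failed on the same commit, since its outcome changed without a code change. The sketch assumes run history is available as `(test, commit, passed)` tuples (a hypothetical format) and splits current failures into likely-real failures and flaky noise. It illustrates the general idea only and is not a description of how Ranger triages results.

```python
from collections import defaultdict

Run = tuple[str, str, bool]  # (test name, commit sha, passed?)

def find_flaky(runs: list[Run]) -> set[str]:
    """Flag a test as flaky if it both passed and failed on the same commit."""
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    return {test for (test, _commit), seen in outcomes.items() if len(seen) == 2}

def triage(runs: list[Run]) -> tuple[set[str], set[str]]:
    """Split failing tests into (likely real failures, flaky noise)."""
    flaky = find_flaky(runs)
    failed = {test for test, _commit, passed in runs if not passed}
    return failed - flaky, failed & flaky

if __name__ == "__main__":
    history = [
        ("test_checkout", "abc123", False), ("test_checkout", "abc123", False),
        ("test_banner", "abc123", True), ("test_banner", "abc123", False),
        ("test_login", "abc123", True),
    ]
    real, noise = triage(history)
    print(real, noise)  # {'test_checkout'} {'test_banner'}
```

A test that has run only once on a commit cannot be flagged this way, which is why flakiness detection usually relies on automatic retries.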

"Ranger has an innovative approach to testing that allows our team to get the benefits of E2E testing with a fraction of the effort they usually require."

- Brandon Goren, Software Engineer, Clay

Real-Time Resource Allocation with Integrations

Ranger keeps teams in sync with real-time integrations for Slack and GitHub. When critical workflows fail, Slack notifications instantly alert the right team members, ensuring swift action. At the same time, the GitHub integration automates test suite execution as code changes occur, providing developers with immediate feedback directly on pull requests.
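Routing a failure to the right people can be sketched with Slack incoming webhooks, which accept a JSON `POST` whose `text` field becomes the message. The workflow-to-team mapping, the placeholder webhook URLs, and the helper names below are hypothetical, and this is a generic pattern rather than Ranger's integration; a CI job would call `route_alert` and then `send_alert` when a critical flow fails on a pull request.

```python
import json
import urllib.request

# Hypothetical placeholders: one Slack incoming-webhook URL per team channel.
TEAM_WEBHOOKS = {
    "payments": "https://hooks.slack.com/services/T000/B000/PLACEHOLDER1",
    "qa": "https://hooks.slack.com/services/T000/B111/PLACEHOLDER2",
}
# Hypothetical ownership map from critical workflow to team.
WORKFLOW_OWNERS = {"checkout": "payments", "signup": "growth"}

def route_alert(workflow: str, pr_number: int, details: str) -> tuple[str, dict]:
    """Pick the owning team's webhook (falling back to QA) and build the message payload."""
    team = WORKFLOW_OWNERS.get(workflow, "qa")
    url = TEAM_WEBHOOKS.get(team, TEAM_WEBHOOKS["qa"])  # teams without a webhook fall back to QA
    text = f"Critical flow '{workflow}' failed on PR #{pr_number}: {details}"
    return url, {"text": text}

def send_alert(url: str, payload: dict) -> int:
    """POST the payload as JSON to a Slack incoming webhook and return the HTTP status."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.status
```

Keeping routing separate from sending makes the ownership rules easy to test without network access.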

This setup eliminates the need for manual test triggering and reduces communication delays - issues that often slow QA processes. By running tests across staging and preview environments, Ranger helps teams catch potential bugs early, keeping up with the demands of fast-moving CI/CD pipelines.

"I definitely feel more confident releasing more frequently now than I did before Ranger. Now things are pretty confident on having things go out same day once test flows have run."

- Jonas Bauer, Co-Founder and Engineering Lead, Upside

Hosted Infrastructure and Scalability

Ranger doesn’t just streamline testing - it also simplifies infrastructure management. The platform provides fully hosted testing environments, spinning up browsers to run consistent tests without burdening internal DevOps teams. This means no more worrying about maintaining or scaling testing infrastructure as your codebase grows.

With Ranger’s automatic scaling, critical flows are continuously validated, freeing developers to focus on creating new features. For fast-paced teams, this translates to fewer operational headaches and more time spent on building.
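The arithmetic behind scaling a browser fleet is straightforward. Assuming tests split evenly across workers and each worker pays a fixed startup cost before running anything, the smallest worker count that meets a time budget is the total test time divided by the usable budget, rounded up. The function name, default cap, and sample numbers are illustrative assumptions.

```python
import math

def workers_needed(num_tests: int, avg_test_s: float, budget_s: float,
                   startup_s: float = 0.0, max_workers: int = 200) -> int:
    """Smallest number of parallel browser workers that finishes within budget_s,
    assuming work splits evenly and every worker pays startup_s before testing.
    The result is capped at max_workers, in which case the budget may be missed."""
    usable_s = budget_s - startup_s
    if usable_s <= 0:
        raise ValueError("budget must exceed the per-worker startup time")
    needed = math.ceil(num_tests * avg_test_s / usable_s)
    return max(1, min(needed, max_workers))

if __name__ == "__main__":
    # 300 flows x 40 s = 12,000 s of work; 600 s budget minus 30 s startup = 570 s usable.
    print(workers_needed(300, 40, 600, startup_s=30))  # 22
```

Since 12,000 / 570 is about 21.05, rounding up gives 22 workers; a hosted platform can recompute this per run as the suite grows.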

"They make it easy to keep quality high while maintaining high engineering velocity. We are always adding new features, and Ranger has them covered swiftly."

- Martin Camacho, Co-Founder, Suno

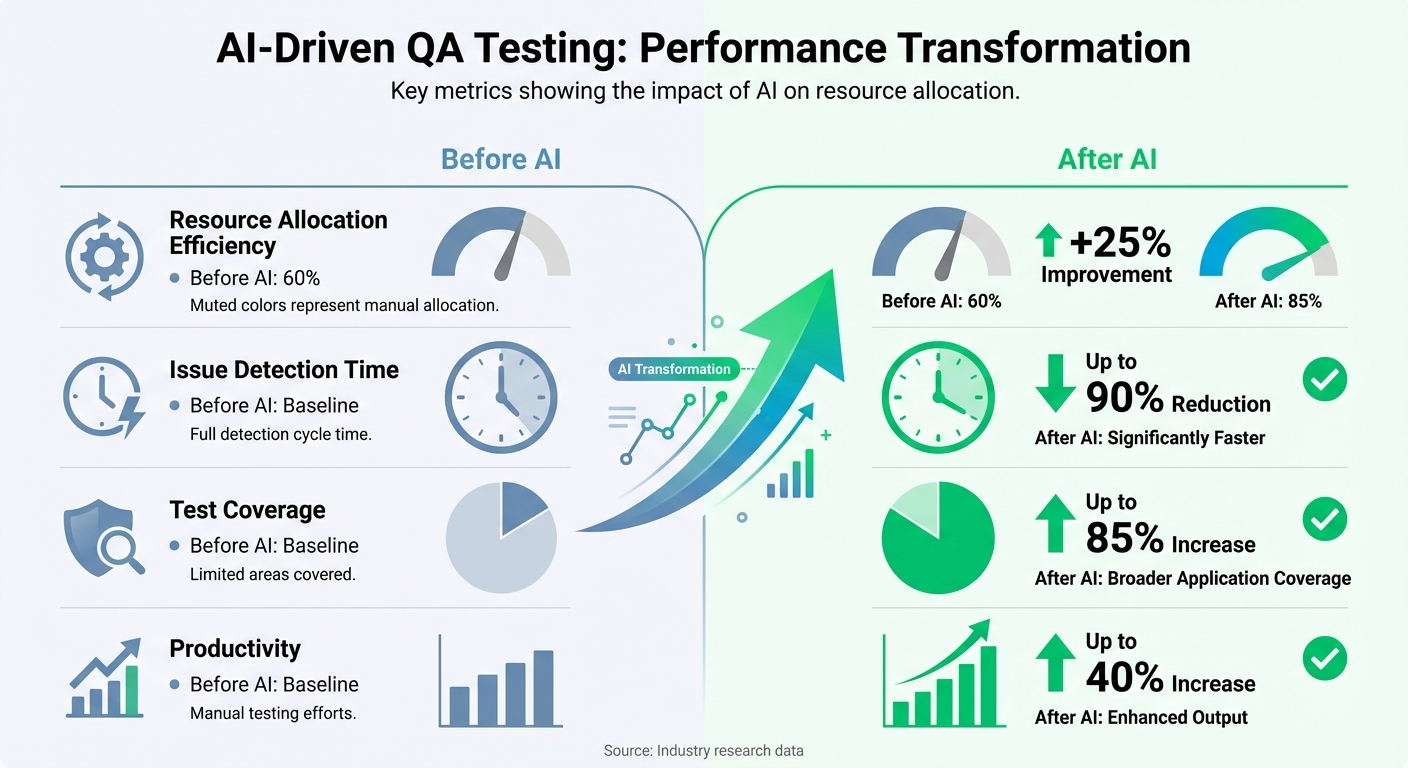

Results and Metrics from AI-Driven Resource Allocation

Before vs After AI in QA Testing: Key Performance Metrics

Key Performance Metrics

Switching to AI-driven resource allocation has brought noticeable improvements to QA testing. Efficiency in resource allocation rises from 60% to 85%, allowing teams to execute significantly more tests. The time needed to detect issues falls by up to 90%, so problems that once took days to surface are found in a fraction of the time. Additionally, test coverage expands by up to 85%, and manual effort drops significantly, leading to overall productivity gains ranging from 21% to 40%.

| Metric | Before AI | After AI | Improvement |
|---|---|---|---|
| Resource Allocation Efficiency | 60% | 85% | +25 percentage points |
| Issue Detection Time | Baseline | Up to 90% reduction | Up to -90% |
| Test Coverage | Baseline | Up to 85% increase | Up to +85% |
| Productivity | Baseline | Up to 40% increase | Up to +40% |
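The first row's improvement is an absolute change of 25 percentage points, from 60% to 85%. Measured relative to the 60% baseline, the gain is larger:

```latex
\frac{85\% - 60\%}{60\%} = \frac{25}{60} \approx 41.7\%
```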

Results with Ranger

These advancements become even more impactful when applied through Ranger's hybrid AI-human approach. By blending automation with expert oversight, Ranger enables engineering teams to meet the fast-paced demands of modern software delivery. The platform automates critical aspects like test creation, maintenance, and triage, while human experts focus on ensuring code quality. This combination allows teams to achieve comprehensive testing with far less effort than traditional methods.

The effect on release cycles is transformative. Teams report greater confidence in deploying updates more frequently, with test flows that support same-day deployment readiness. Ranger helps maintain high-quality standards even as teams accelerate their development cycles and introduce new features continuously.

Conclusion

AI-powered resource allocation is reshaping QA testing, turning it into a strategic advantage for software teams. By moving away from manual testing processes to intelligent automation, teams achieve improvements in efficiency, faster bug detection, and broader test coverage - all while cutting down on manual labor. These advancements fundamentally change how software teams operate, paving the way for smarter and more adaptive testing workflows.

While AI shines in automating repetitive tasks, targeting high-risk areas, and adjusting to changes with self-healing scripts, human involvement ensures that quality and reliability remain intact. This combination of AI and human oversight addresses the limitations of traditional manual testing, enabling QA teams to focus more on exploratory testing and strategic initiatives instead of managing fragile test scripts.

"I definitely feel more confident releasing more frequently now than I did before Ranger. Now things are pretty confident on having things go out same day once test flows have run." - Jonas Bauer, Co-Founder and Engineering Lead, Upside

As teams grapple with the challenges of scalability and unpredictability, AI-driven approaches provide a renewed sense of control and assurance. Tools like Ranger directly address inefficiencies in traditional QA by integrating seamlessly with platforms like Slack and GitHub, offering hosted infrastructure, and automating test creation - removing many of the roadblocks associated with manual QA.

Adopting AI-driven resource allocation today allows software teams to release updates faster, catch more bugs, and uphold higher quality standards. At the same time, it reduces costs and frees up engineers to concentrate on building the features that truly make a difference.

FAQs

How does AI make QA testing more efficient?

AI brings a new level of efficiency to QA testing by taking over repetitive tasks, anticipating potential failures, and automatically updating test scripts. This not only cuts down on the need for manual effort but also shortens testing cycles while delivering more precise results.

With AI handling the routine, teams can dedicate their energy to solving complex issues, which saves time and enhances the overall quality of the software. Plus, AI helps allocate resources more effectively, reducing waste and boosting productivity throughout the testing process.

What are the advantages of using self-healing test scripts in QA testing?

Self-healing test scripts come with several standout benefits for QA testing. One of their biggest perks is the ability to automatically adjust to software changes, which can slash manual script maintenance efforts by as much as 70%. That means less time spent fixing scripts and more time saved for other priorities.

These scripts also tackle common testing headaches like false positives and flaky tests, leading to more dependable and precise results. On top of that, they offer real-time error detection and diagnosis, boosting testing efficiency and helping teams concentrate on delivering top-notch software at a faster pace.

How does Ranger streamline QA testing in CI/CD pipelines?

Ranger fits effortlessly into CI/CD pipelines, streamlining the QA process. By automating tasks like creating tests and maintaining scripts, it cuts down on manual work and speeds up testing cycles. Its AI-driven approach prioritizes essential tests by analyzing factors like code changes and risk levels, helping teams catch bugs faster while making the best use of their resources.

With Ranger, software teams can boost testing accuracy, save valuable time, and concentrate on rolling out top-notch features faster - all without disrupting their collaborative development workflows.
