AI-Powered Regression Testing: Key Benefits

AI-powered regression testing is transforming software development by addressing the inefficiencies of manual testing. Traditional regression testing is slow, prone to human error, and difficult to scale, especially for large projects. AI offers a smarter approach by automating repetitive tasks, predicting high-risk areas, and running only the most relevant tests. This saves time, reduces costs, and improves accuracy.

Key highlights:

- Time Savings: AI focuses on critical tests, reducing test cycles by up to 70%.

- Improved Accuracy: Self-healing scripts adapt to code changes, minimizing flaky tests.

- Cost Reduction: Automating up to 80% of workflows can save enterprises 30–50% on QA budgets.

- Risk Prediction: AI analyzes code changes and historical data to identify potential failures early.

- Seamless Integration: Works with CI/CD pipelines to ensure faster releases and fewer disruptions.



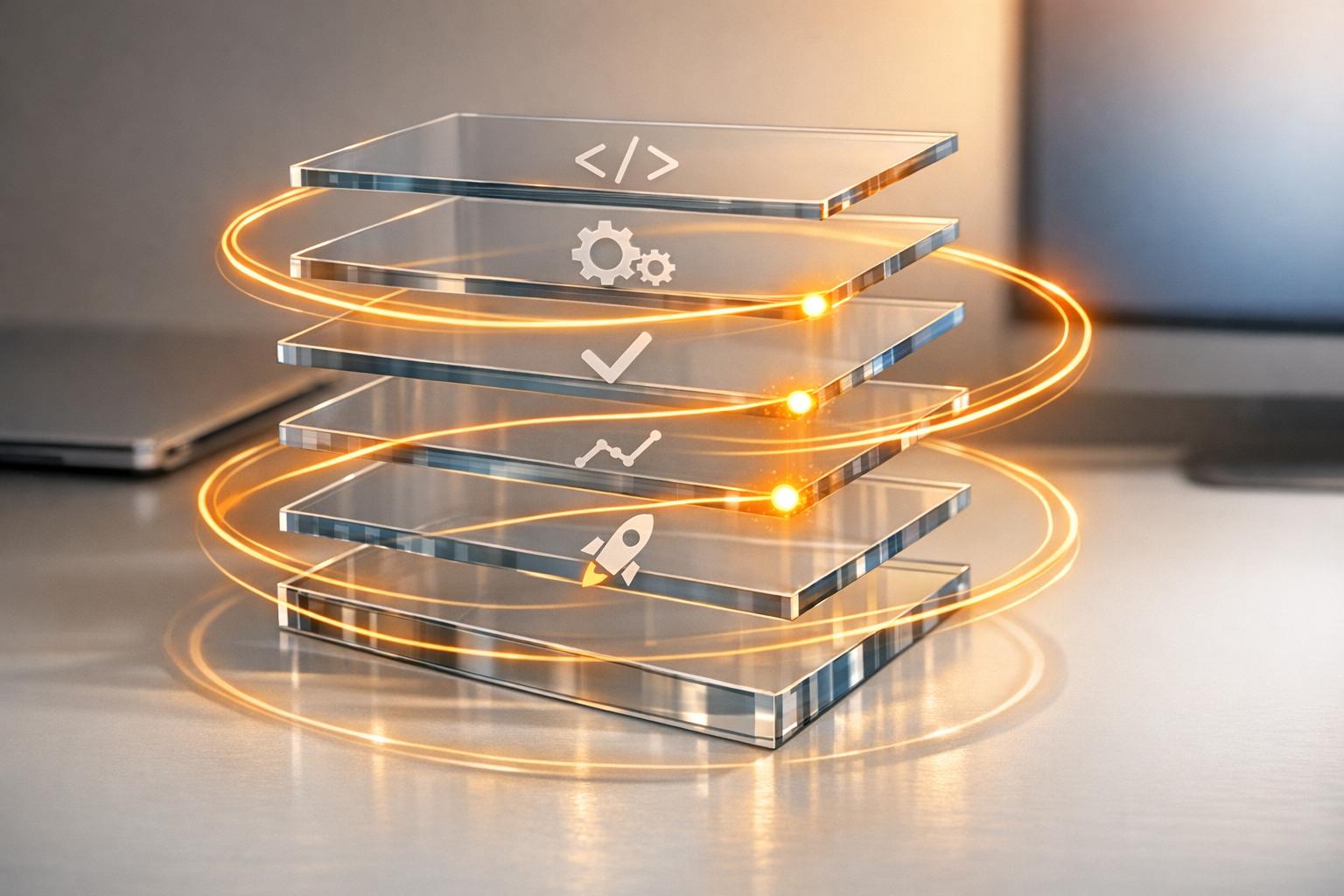

Problems with Manual Regression Testing

Before diving into how AI can improve regression testing, it's important to understand the challenges tied to manual approaches. Shockingly, only 3% of organizations fully integrate their regression suites into delivery pipelines. This leaves the majority stuck in slow, manual testing cycles, even for minor updates. These delays aren't just about the sheer number of tests - they also stem from the inherent flaws in manual workflows.

Manual Work and Human Error

Manual regression testing often relies heavily on the memory and experience of testers. This approach doesn't scale well. In fact, 67% of QA teams admit that up to half of their testing depends on testers without specialized roles. This reliance can lead to inconsistent results, as testers often base decisions on personal familiarity rather than data-driven insights.

Fatigue is another major issue. Repeatedly performing monotonous tasks can cause testers to miss subtle problems, like tiny layout glitches or hidden dependencies. This is one reason why 83% of tech professionals, including QA and development teams, report burnout caused by last-minute fixes and the stress of manual testing cycles. And the stakes are high - software failures have contributed to a staggering $1 trillion in global losses.

"Manually performing regression testing is a repetitive and monotonous task, leading to a decline in attention and motivation."

- Salman Khan, Test Automation Evangelist, TestMu AI

But human error isn't the only challenge. Scaling manual regression testing is an entirely different beast.

Difficulty Scaling Tests

As software projects grow, managing manual regression testing becomes a logistical nightmare. For instance, running a full manual regression suite on a large codebase can take up to 18 hours. This creates significant bottlenecks in CI/CD pipelines and delays releases. Without tools to intelligently prioritize tests, teams often end up running hundreds of tests - even for something as simple as a one-line code change.

On top of that, maintenance debt piles up fast. Small changes, like renaming a button or tweaking a component, can require extensive rewrites of test scripts. Manual data setup adds even more time - seeding databases or mocking APIs can take 20–40 minutes per test run. With tighter release schedules, static checklists often fail to catch high-risk areas, leaving critical business workflows exposed to potential failures.

How AI Improves Regression Testing

AI tackles the challenges of manual regression testing by selecting tests based on code changes, historical data, and risk patterns, then running only those. Instead of executing an entire suite of 10,000 tests, AI might focus on 200 critical ones, saving time without sacrificing quality [5,10].

For example, Microsoft slashed regression testing time by 70% across Windows, Office, and Azure. Similarly, a major bank reduced its testing cycle from three weeks to just three days - an 85% improvement. This faster process accelerates both code validation and feature releases, leading to quicker feedback and more efficient development workflows.

Faster Test Cycles and Quicker Releases

AI speeds up testing through smarter test selection and parallel execution. By analyzing code changes, AI identifies which tests are most relevant, especially in historically fragile areas. This risk-based focus ensures developers receive feedback in minutes rather than hours [5,6].
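To make the idea of change-based test selection concrete, here is a minimal sketch in Python. It assumes a coverage map (which files each test exercises) and a record of recent pass/fail history already exist. The names `SuiteEntry` and `select_tests` are hypothetical and not taken from any specific tool, and real systems typically use far richer signals than a simple failure rate.

```python
from dataclasses import dataclass


@dataclass
class SuiteEntry:
    name: str
    covered_files: set[str]  # files this test exercises (assumed coverage map)
    recent_runs: int         # number of recent runs with recorded results
    recent_failures: int     # how many of those runs failed


def select_tests(changed_files: set[str], tests: list[SuiteEntry], limit: int) -> list[str]:
    """Return up to `limit` tests that touch a changed file, riskiest first."""
    impacted = [t for t in tests if t.covered_files & changed_files]

    def failure_rate(t: SuiteEntry) -> float:
        return t.recent_failures / t.recent_runs if t.recent_runs else 0.0

    # Highest historical failure rate first; name breaks ties for stable output.
    impacted.sort(key=lambda t: (-failure_rate(t), t.name))
    return [t.name for t in impacted[:limit]]


# Illustrative data only.
suite = [
    SuiteEntry("test_checkout", {"cart.py", "payment.py"}, 50, 10),
    SuiteEntry("test_login", {"auth.py"}, 50, 1),
    SuiteEntry("test_cart_totals", {"cart.py"}, 50, 2),
    SuiteEntry("test_profile", {"profile.py"}, 50, 0),
]

selected = select_tests({"cart.py"}, suite, limit=10)
print(selected)  # ['test_checkout', 'test_cart_totals']
```

A change to `cart.py` triggers only the two tests that cover it, with the historically more fragile one first. The other two tests are skipped for this commit.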

Additionally, self-healing scripts adapt to UI changes automatically. For instance, if a button label changes from "Submit" to "Confirm", the script updates itself, avoiding test failures [2,5]. These dynamic adjustments keep CI/CD pipelines running smoothly by reducing flaky tests and minimizing disruptions.
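One common way to approximate self-healing is a prioritized list of fallback locators: if the primary locator no longer matches, the script tries more stable attributes and records which one worked. The sketch below illustrates that idea on a toy page model (a list of attribute dictionaries). It is an assumption about how such a mechanism could work, not the algorithm of any particular product, and the names `find` and `find_with_healing` are hypothetical.

```python
from typing import Optional

# Toy page model: each element is a dict of attributes.
# Real tools would inspect a live DOM instead.
Element = dict[str, str]


def find(page: list[Element], attr: str, value: str) -> Optional[Element]:
    """Return the first element whose attribute `attr` equals `value`."""
    for element in page:
        if element.get(attr) == value:
            return element
    return None


def find_with_healing(page: list[Element], locators: list[tuple[str, str]]):
    """Try locators in priority order and report which one matched.

    Returns (element, locator_used, healed). `healed` is True when the
    primary locator failed and a fallback matched instead.
    """
    for index, (attr, value) in enumerate(locators):
        element = find(page, attr, value)
        if element is not None:
            return element, (attr, value), index > 0
    return None, None, False


# The button label changed from "Submit" to "Confirm"; its test id did not.
page = [{"role": "button", "text": "Confirm", "test_id": "submit-order"}]
locators = [("text", "Submit"), ("test_id", "submit-order")]

element, used, healed = find_with_healing(page, locators)
print(used, healed)  # ('test_id', 'submit-order') True
```

When `healed` is true, a tool could promote the working locator to primary for future runs and flag the change for human review.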

Better Test Coverage and Accuracy

Beyond speed, AI improves test coverage and uncovers gaps that manual methods often miss. By analyzing historical data and usage patterns, AI identifies workflows that lack sufficient testing and suggests new test cases. For example, the AI tool Cleverest found bugs in 50% of bug-introducing commits and reproduced 66% of patched bugs in XML and JavaScript programs within three minutes.

Visual AI further enhances accuracy in UI regression testing by detecting meaningful layout and design changes. Unlike pixel-based comparisons, it identifies issues such as misaligned elements or broken responsive designs [5,10]. AI-generated test cases are also highly distinct, with only 10% similarity to existing ones, as they are built from the underlying logic of the code.
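The difference from pixel-based comparison can be illustrated with a much simpler stand-in: comparing element geometry with a tolerance, so that a one-pixel rendering shift is ignored while a displaced button is reported. The sketch below is not visual AI (which relies on learned models). It only shows the principle of comparing structure rather than raw pixels, and `Box`, `layout_regressions`, and the 2-pixel tolerance are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    x: int
    y: int
    width: int
    height: int


def layout_regressions(baseline: dict[str, Box], current: dict[str, Box],
                       tolerance: int = 2) -> list[str]:
    """Report elements that vanished, moved, or resized beyond `tolerance` pixels."""
    issues = []
    for name, old in baseline.items():
        new = current.get(name)
        if new is None:
            issues.append(f"{name}: missing")
            continue
        moved = abs(new.x - old.x) > tolerance or abs(new.y - old.y) > tolerance
        resized = (abs(new.width - old.width) > tolerance
                   or abs(new.height - old.height) > tolerance)
        if moved or resized:
            # If both apply, "moved" is reported.
            issues.append(f"{name}: {'moved' if moved else 'resized'}")
    return issues


# Illustrative data: the header shifts by 1px (noise), the button by 60px.
baseline = {"header": Box(0, 0, 800, 60), "buy_button": Box(600, 400, 120, 40)}
current = {"header": Box(0, 1, 800, 60), "buy_button": Box(600, 460, 120, 40)}

print(layout_regressions(baseline, current))  # ['buy_button: moved']
```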

Lower Costs and Better Resource Use

AI significantly lowers costs by automating repetitive tasks, allowing QA teams to focus on high-value testing. QA and testing typically consume 25–40% of IT budgets in large enterprises. Automating up to 80% of testing workflows with AI can drastically cut these expenses. For instance, one global e-commerce company reduced testing costs by 60%, improved checkout uptime from 94% to 99.9%, and saved an estimated $30 million annually.

AI also changes how QA teams allocate their time. Instead of spending hours updating broken scripts or preparing test data, teams can focus on strategic tasks like exploratory testing and solving complex edge cases. AI takes care of the repetitive work - generating realistic test data, updating scripts for UI changes, and capturing organizational knowledge - freeing up QA professionals to guide AI systems and tackle challenges that require human intuition [1,2,10].

AI Predictions and Risk Reduction

Thanks to faster testing cycles and broader test coverage, AI now takes a proactive role in predicting risks to prevent failures. Instead of waiting to troubleshoot issues after they appear, AI uses machine learning to analyze historical defect data, test results, and application logs. This allows it to identify high-risk areas before testing even starts, ensuring that the most critical test cases are prioritized. This approach amplifies the effectiveness of automated regression testing.

Predicting Defects Before They Occur

AI goes a step further by analyzing code diffs - essentially, the changes between current and previous versions of code. These insights help pinpoint where bugs are most likely to show up during a commit or pull request. AI also uses commit messages and code changes to create tests specifically designed to predict and reveal defects.
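As a rough illustration of diff-based risk scoring, the sketch below combines how much a file changed with how often past changes to it introduced defects. Production systems would typically train a model on many more features. The equal weights, the 100-line cap, and the names `risk_score` and `rank_changed_files` are all assumptions made for this example.

```python
def risk_score(lines_changed: int, past_defects: int, past_commits: int) -> float:
    """Heuristic risk in [0, 1]: churn blended with historical defect rate."""
    defect_rate = min(past_defects / past_commits, 1.0) if past_commits else 0.0
    churn = min(lines_changed / 100, 1.0)  # assumption: 100+ lines is maximum churn
    return 0.5 * churn + 0.5 * defect_rate  # assumption: equal weights


def rank_changed_files(diff_stats: dict[str, int],
                       history: dict[str, tuple[int, int]]) -> list[tuple[str, float]]:
    """Rank files in a diff from most to least risky.

    diff_stats maps path -> lines changed in this commit.
    history maps path -> (past defects, past commits); unknown files get (0, 0).
    """
    scores = {
        path: risk_score(lines, *history.get(path, (0, 0)))
        for path, lines in diff_stats.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Illustrative data only.
diff_stats = {"README.md": 5, "payment.py": 40}
history = {"payment.py": (6, 20), "README.md": (0, 10)}

ranking = rank_changed_files(diff_stats, history)
for path, score in ranking:
    print(f"{path}: {score:.3f}")
```

Here `payment.py` ranks first because it combines a larger change with a history of defects, so tests covering it would be prioritized.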

A January 2025 study on Cleverest showcased just how efficient this can be. According to the research, Cleverest was able to generate bug-revealing tests in under three minutes. It detected defects in 50% of bug-introducing commits and reproduced 66% of patched bugs.

"For programs using more human-readable file formats, like XML or JavaScript, we found Cleverest performed very well. It generated easy-to-understand bug-revealing or bug-reproduction test cases for the majority of commits in just under three minutes." - Jing Liu et al.

These capabilities pave the way for real-time alerts, ensuring teams stay informed at every critical step.

Real-Time Risk Alerts

In addition to predicting defects, AI generates real-time risk alerts that immediately flag high-risk code changes. These alerts guide teams to focus on the areas that need the most attention. By analyzing execution results, unresolved issues, and past failure patterns, AI can signal whether a release is ready or if more testing is required. To avoid unnecessary noise, it suppresses non-critical alerts, highlighting only meaningful regressions.

This smarter prioritization has tangible benefits. AI-driven QA can reduce the size of regression test suites by 30–40% while still maintaining full coverage. Enterprises have reported cost savings of 30–50% due to reduced maintenance needs and faster test execution. By clustering similar errors, AI also speeds up root-cause analysis, enabling developers to resolve issues more quickly. This targeted approach ensures that critical user flows are verified first, with alerts focused on major functionality rather than minor glitches.
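Error clustering can be approximated without any machine learning by masking the volatile parts of each message (IDs, durations, addresses) and grouping failures that share the resulting signature. The sketch below shows that simplified approach. The function names and sample messages are hypothetical, and real tools may use embeddings or stack-trace similarity instead.

```python
import re
from collections import defaultdict


def signature(message: str) -> str:
    """Reduce an error message to a signature by masking volatile details."""
    masked = re.sub(r"0x[0-9a-fA-F]+", "<hex>", message)  # memory addresses
    masked = re.sub(r"\d+", "<n>", masked)                # ids, ports, durations
    return masked.strip().lower()


def cluster_failures(failures: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Group (test_name, error_message) pairs by error signature."""
    clusters = defaultdict(list)
    for test_name, message in failures:
        clusters[signature(message)].append(test_name)
    return dict(clusters)


# Illustrative data only.
failures = [
    ("test_checkout", "Timeout after 3000 ms waiting for /api/orders/17"),
    ("test_refund", "Timeout after 3000 ms waiting for /api/orders/42"),
    ("test_login", "AssertionError: expected 200, got 500"),
]

clusters = cluster_failures(failures)
for sig, tests in clusters.items():
    print(f"{len(tests)} test(s): {sig}")
```

The two timeout failures collapse into a single cluster, so a developer investigates one likely root cause instead of two separate reports.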


Scaling Tests and Reducing Maintenance

AI isn't just about speed and precision - it also simplifies test maintenance as applications grow and evolve. As software becomes more complex and development teams push updates faster, traditional test suites often become a headache. Static scripts tend to break whenever the UI changes, leaving QA teams scrambling to fix tests instead of focusing on finding bugs. AI-driven solutions address this by replacing fragile scripts with adaptive agents (like those powered by Playwright). These agents can navigate and generate tests dynamically, keeping test cases relevant without constant manual intervention.

Self-Healing Tests and Automatic Updates

One standout feature of AI in testing is self-healing. When UI elements change - like a button moving, a label being updated, or a new feature being added - AI can automatically update the affected test cases. This eliminates the need for manual script rewrites. By doing so, self-healing not only saves time but also directly supports faster release cycles and reduces risks.

Automated adaptation to code changes has also been studied for test generation. Between September 2024 and May 2025, researchers tested the AI-powered tool Cleverest on 22 commits across three programs: MuJS, libxml2, and Poppler. In most cases, Cleverest generated bug-reproduction test cases in under three minutes by analyzing code changes and using a feedback loop. Additionally, modern AI platforms efficiently manage test infrastructure, allowing teams to scale their test suites without adding extra DevOps overhead.

Integration with CI/CD Pipelines

AI also enhances test stability by integrating seamlessly into CI/CD workflows. With AI-powered regression testing, test suites are triggered automatically with every commit or pull request. These systems analyze code changes and historical patterns to prioritize the most relevant tests, cutting down the time spent running unnecessary ones. Results - including screenshots, logs, and even video - are shared directly through tools like GitHub and Slack, ensuring developers get quick, actionable feedback.

"Now things are pretty confident on having things go out same day once test flows have run."

- Jonas Bauer, Co-Founder and Engineering Lead at Upside

Self-healing locators further enhance stability by preventing brittle test failures caused by UI updates, which are common in traditional CI/CD pipelines. Combined with automated triage, this ensures developers are alerted only to genuine issues, helping maintain high development speed without sacrificing quality.
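One simple signal that automated triage could use is whether a test produced different results on the same commit: if the code did not change but the outcome did, the failure is likely flaky rather than a genuine regression. The sketch below applies only that rule. It is an illustrative assumption about how triage might work, and `classify_failures` is a hypothetical name.

```python
from collections import defaultdict


def classify_failures(runs: list[tuple[str, str, bool]]) -> dict[str, str]:
    """Classify every test that failed at least once.

    `runs` holds (test_name, commit_sha, passed) tuples. A test that both
    passed and failed on the same commit is labeled "flaky"; a test that
    only failed on a commit is labeled "consistent failure".
    """
    outcomes = defaultdict(set)  # (test, commit) -> set of observed results
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)

    verdicts = {}
    for (test, _commit), seen in outcomes.items():
        if seen == {True, False}:
            verdicts[test] = "flaky"  # same code, different results
        elif seen == {False} and verdicts.get(test) != "flaky":
            verdicts[test] = "consistent failure"
    return verdicts


# Illustrative data only.
runs = [
    ("test_search", "abc123", True),
    ("test_search", "abc123", False),
    ("test_export", "abc123", False),
    ("test_export", "abc123", False),
    ("test_login", "abc123", True),
]

verdicts = classify_failures(runs)
print(verdicts)  # {'test_search': 'flaky', 'test_export': 'consistent failure'}
```

Only `test_export` would warrant an alert here. `test_search` could be queued for a retry or quarantined for later investigation.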

How Ranger Uses AI for Regression Testing

Ranger takes the potential of AI in regression testing and shapes it into a platform that meets the demands of today’s development teams. By combining automated test generation with expert human oversight, Ranger delivers reliable results that help teams roll out features faster. Here's a closer look at how Ranger incorporates AI-driven test automation into its workflow to enhance QA processes.

Automated Test Creation and Updates

With Ranger, AI steps in to handle the heavy lifting of test creation. It automatically generates tests based on code changes, removing the need for manual scripting. As your application evolves, the platform adapts by creating new test scenarios and includes self-healing capabilities. This means tests can automatically adjust when UI elements are updated, ensuring your test coverage stays thorough without requiring constant manual intervention.

AI Testing with Human Review

Ranger employs a "cyborg" approach, where AI generates test code, and QA experts step in to review and refine it. This collaboration ensures that the tests are both reliable and easy to maintain. Human oversight is key to catching subtle issues that might slip past pure automation, making sure the tests align with high-quality standards. The platform also streamlines failure triage, filtering out flaky tests and environmental noise. As a result, engineering teams only get alerts for critical issues and genuine bugs.

"Ranger has an innovative approach to testing that allows our team to get the benefits of E2E testing with a fraction of the effort they usually require." - Brandon Goren, Software Engineer at Clay

Integration with Development Tools

Ranger seamlessly integrates with tools like GitHub and Slack, triggering tests with every commit and sharing results directly in pull requests. Real-time Slack notifications keep stakeholders informed about critical issues as they arise.

Beyond integration, Ranger manages the testing infrastructure by running tests in staging and preview environments. This supports CI/CD workflows, helping teams maintain their speed while catching bugs before they hit production.

Conclusion

AI-powered regression testing is transforming how software teams work by speeding up cycles, expanding test coverage, and cutting costs. By automating repetitive tasks and adapting to code changes, these tools streamline testing processes. Features like self-healing tests reduce the need for constant maintenance, while predictive analytics help identify defects early - keeping pace with the rapid demands of modern development.

Consider this: By 2025, AI testing tools are projected to account for 40% of central IT budgets and replace 50% of manual testing efforts, executing thousands of tests in just minutes. The result? Faster releases and better-quality software.

Take Ranger, for example. This platform automates test creation and updates while incorporating human review to ensure reliability. Teams using Ranger report noticeable improvements in their confidence to release updates. Jonas Bauer, Co-Founder and Engineering Lead at Upside, shared:

"I definitely feel more confident releasing more frequently now than I did before Ranger. Now things are pretty confident on having things go out same day once test flows have run."

This shift to AI-powered testing isn’t just about speed - it’s about creating better software with fewer resources. By reducing the time spent on maintenance, teams can focus on innovation. They can catch bugs before users do and release new features with confidence. As development accelerates and applications become more complex, AI-driven testing is no longer optional - it’s essential for maintaining quality and efficiency.

FAQs

How does AI enhance the accuracy of regression testing?

AI improves the reliability of regression testing by automating the process of fixing broken tests and cutting down on false positives. Through machine learning, it reviews historical data to spot and reduce flaky test results, leading to more dependable outcomes and a stronger sense of trust in your testing process.

By simplifying test maintenance and boosting consistency, AI frees up teams to concentrate on delivering high-quality software more efficiently and with fewer interruptions.

What are the cost advantages of using AI for regression testing?

AI-driven regression testing slashes costs by automating test updates, reducing false positives, and limiting the need for manual work. This streamlined process can cut maintenance efforts by an impressive 70–95%.

On top of that, AI catches bugs earlier in the development cycle, sparing teams from costly fixes down the road. For enterprises, this can translate to millions in annual savings while delivering faster and more dependable software releases.

How does AI improve testing efficiency in CI/CD pipelines?

AI is transforming CI/CD pipelines by making testing faster and more efficient through automation. It handles essential tasks like creating, prioritizing, and maintaining tests. By analyzing code changes, past data, and potential risks, AI identifies the most critical areas to test. This approach cuts down on unnecessary test runs and accelerates feedback loops.

Another advantage is the reduction of manual work. AI automatically updates test scripts to keep them functional when code or UI changes, which helps eliminate unreliable test results. It also simplifies test data management by generating synthetic data, protecting sensitive information, and providing data as needed. These features allow development teams to release high-quality software more quickly without disrupting their workflow.
