AI Test Data Management for CI/CD Pipelines

Managing test data in CI/CD pipelines can be a bottleneck, but AI offers a solution. Here's how AI-powered tools simplify test data management:

- Faster Data Access: AI generates and provisions datasets on-demand, cutting setup times by up to 70%.

- Data Consistency: It ensures tests run on accurate, production-like data, reducing flaky tests and false positives.

- Compliance: AI detects and masks sensitive information automatically, meeting regulations like HIPAA and GDPR.

- Efficiency: Synthetic data generation and smart subsetting replace slow, manual processes, saving hundreds of hours annually.

- Integration: Tools like Jenkins and GitHub Actions can trigger AI-driven data provisioning directly within pipelines.

Core Concepts in Test Data Management for CI/CD

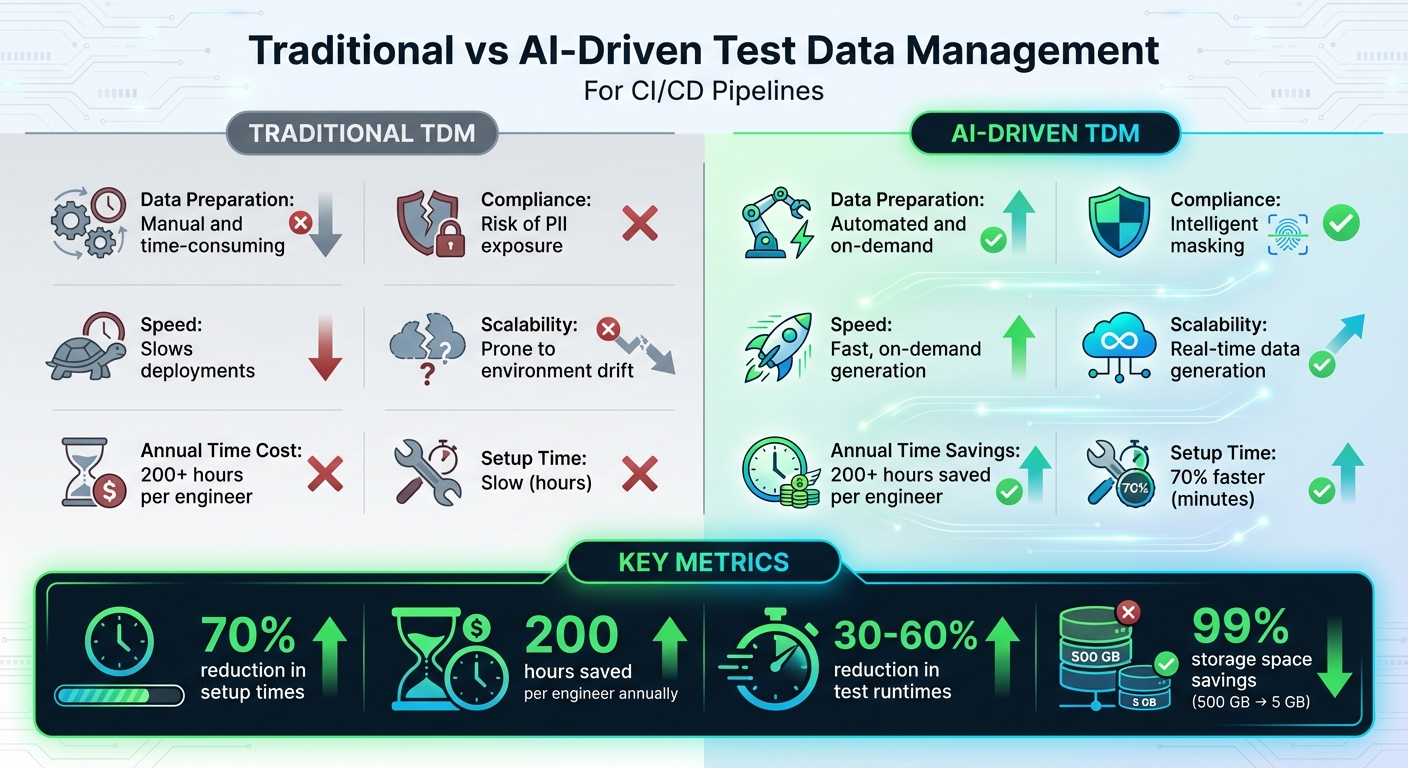

Traditional vs AI-Driven Test Data Management in CI/CD Pipelines

Test data management (TDM) plays a critical role in ensuring that every stage of your CI/CD pipeline operates with realistic, secure data - without slowing down deployment timelines. When implemented effectively, TDM allows unit tests, integration tests, and pre-production validation to run on data that mirrors real-world scenarios. This approach avoids exposing sensitive information while maintaining efficiency.

Test Data Needs Across CI/CD Stages

Each stage of the CI/CD pipeline has distinct data requirements. For unit testing, lightweight synthetic or mocked data is used to validate isolated code changes. These tests run quickly - often in seconds - because they focus on specific functions without relying on external systems.

Integration testing, on the other hand, demands more complex datasets. At this stage, the data must simulate real-world interactions between components. It’s used to verify API communications, data flows, and service dependencies under realistic conditions. By provisioning data on demand, setup times can be reduced by up to 70%.

When it comes to pre-production validation - which includes end-to-end and performance testing - you need data that closely resembles production environments. This stage identifies bottlenecks, environment mismatches, and edge cases that only emerge under full-scale loads. Without realistic data volumes, teams risk releasing features that falter under real user traffic. Unfortunately, traditional methods often fail to meet these dynamic requirements.

Challenges with Traditional TDM Approaches

Traditional TDM methods often fall short in keeping up with the fast-paced demands of CI/CD pipelines. One major issue is manual preparation. Engineers frequently spend hours cloning production databases, anonymizing fields, and refreshing environments before each test cycle. This repetitive work can eat up more than 200 hours per engineer annually, pulling focus away from development.

Another challenge is outdated data. Static datasets quickly become obsolete, failing to reflect recent code changes or updates in production. This can lead to environment drift - where test environments no longer align with reality. As a result, tests may pass in staging but fail in production, or worse, miss critical bugs entirely. These false negatives can delay releases and reduce trust in automated testing.

Compliance risks further complicate the situation. Using raw production data can inadvertently expose sensitive information such as personally identifiable information (PII) hidden in unstructured fields like free-text notes or JSON blobs. Manual masking often misses these details, creating potential violations of regulations like HIPAA, GLBA, and state privacy laws. On the flip side, overly aggressive masking can strip away the relationships needed to catch real bugs during testing. These issues highlight the pressing need for an automated, AI-driven approach to TDM.

| Aspect | Traditional TDM | AI-Driven TDM |

|---|---|---|

| Data Prep | Manual and time-consuming | Automated and on-demand |

| Compliance | Risk of PII exposure | Intelligent masking |

| Speed | Slows deployments | Fast, on-demand generation |

| Scalability | Prone to environment drift | Real-time data generation |

AI-driven TDM offers a solution to these challenges by automating data generation and compliance processes.

How AI-Driven TDM Solves These Issues

AI-driven TDM transforms the test data lifecycle by automating key processes. It handles PII masking and generates synthetic data that retains critical relationships, ensuring compliance while providing realistic test scenarios. This approach allows integration tests to validate business logic without risking sensitive data exposure.

Through synthetic data generation, AI creates targeted datasets on demand, eliminating the need to copy entire production databases. By analyzing pipeline data, code changes, and historical test outcomes, AI can generate domain-specific data at runtime. This minimizes dependence on static datasets. For example, in a Jenkins-based CI/CD pipeline, AI can automatically mask PII and produce synthetic data that mirrors production statistics, reducing test flakiness and resolving mismatches.

On-demand provisioning integrates seamlessly into CI/CD workflows via APIs and YAML configurations. When a build triggers, AI generates the exact data required for that stage - no manual effort needed. Feedback loops analyze test failures and performance metrics to continuously refine the data generation process, optimizing future runs. This approach speeds up test cycles by 70%, enabling production-grade environments to be ready in minutes.

One example of this approach is Ranger, an AI-driven solution that integrates directly into CI/CD pipelines to deliver scalable and compliant test data management (https://ranger.net).

AI Capabilities for TDM in CI/CD Pipelines

AI is transforming test data management (TDM) by introducing advanced features like synthetic data generation, automated masking, smart subsetting, on-demand provisioning, and feedback-driven optimization. These tools not only streamline CI/CD processes but also tackle challenges related to speed, accuracy, and regulatory compliance. Let’s dive into how these capabilities are reshaping TDM in CI/CD pipelines.

Synthetic Data Generation for Realistic Testing

AI-powered tools can create synthetic data by analyzing patterns, correlations, and edge cases in existing datasets, all while ensuring sensitive information isn’t duplicated. This allows teams to simulate realistic test scenarios without compromising privacy.

For instance, teams working in industries like healthcare, fintech, or e-commerce can configure domain-specific schemas. A payments application, for example, might generate synthetic transaction streams that use U.S. addresses, ZIP codes, and dollar formats. This enables robust load and fraud testing without exposing real cardholder data.

One standout benefit is the ability to test rare or extreme scenarios, such as fraudulent transactions or unusual user behaviors. Teams have reported a 30–60% reduction in test runtimes and better defect detection by focusing on edge cases. Privacy concerns are addressed by training on masked or tokenized data and validating outputs with metrics like uniqueness thresholds and membership inference checks. Additionally, audit logs and data catalogs ensure compliance with regulations like GDPR and CCPA.

Automated Data Masking and PII Protection

After generating data, automated masking secures sensitive information. AI algorithms use natural language processing (NLP), pattern recognition, and schema inference to identify and mask personal data. Techniques like deterministic tokenization and format-preserving encryption keep the data usable for testing while ensuring compliance with privacy laws.

This process can be integrated directly into CI/CD pipelines. For example, an AI classifier scans incoming data for sensitive fields, applies masking policies, and writes the masked data to test environments. Using policy-as-code, organizations can store masking rules in version control, enabling version tracking and better regulatory compliance. Comprehensive audit logs and access controls further simplify meeting audit requirements.

Smart Data Subsetting and Versioning

AI simplifies data subsetting by analyzing large datasets and creating smaller, targeted subsets that retain critical workflows. This approach reduces storage needs and test runtimes without sacrificing test coverage. For example, instead of replicating a 500 GB production database, AI might generate a 5 GB subset, saving up to 99% of storage space.

Teams can define dataset sizes for different CI/CD stages. Tiny subsets might be used for pull request validation, while larger subsets are reserved for nightly regression tests. Metrics like dataset size, execution time, and defect detection rates ensure the subsets remain effective. Subset definitions can be stored as metadata or "data-as-code" configurations (e.g., YAML) to ensure reproducibility and traceability.

On-Demand Data Provisioning for Pipelines

AI-driven TDM platforms provide APIs and CLI/SDK tools that integrate seamlessly with CI/CD tools like Jenkins, GitHub Actions, and GitLab CI. These tools can generate datasets on demand, streamlining test setup and reducing provisioning time by up to 70%.

For example, a pull request might trigger a pipeline that calls an AI TDM API to generate a small, high-risk subset of masked or synthetic data. This data is loaded into temporary test environments where unit and integration tests are executed before the environment is dismantled. Policy-based data selection ensures compliance - synthetic data might be used on public cloud runners, while masked data is reserved for internal systems. Platforms like Ranger connect directly to CI/CD pipelines and integrate with tools like GitHub and Slack to simplify and scale test data management (https://ranger.net).

Continuous Improvement Through Feedback Loops

AI systems use feedback loops to analyze test results, failure logs, and coverage reports, continuously refining test data strategies. For instance, if tests frequently fail on transactions above $10,000, the AI might generate more high-value transactions to improve coverage. It can also rebalance datasets to emphasize high-risk workflows or update PII detection when new data fields emerge.

These adjustments are often reviewed by QA experts to ensure alignment with domain knowledge before being fully implemented. This collaborative approach reduces test cycle times and minimizes production issues, while maintaining a human-in-the-loop to refine AI-generated suggestions and guide updates to testing strategies effectively.

sbb-itb-7ae2cb2

Implementing AI Test Data Management in CI/CD

Building on earlier discussions about traditional test data management (TDM) challenges, integrating an AI-driven approach can effectively address these inefficiencies.

Assessing Your Current Test Data Management Practices

Start by mapping the flow of test data across unit, integration, and end-to-end tests. Measure key metrics like provisioning times, failure rates, and the sources of your test data. Manual data setups can significantly slow down test cycles, and the presence of unmasked personally identifiable information (PII) introduces compliance risks.

To establish a baseline, track how long it takes to provision environments per pipeline run. Identify where your test data originates - whether from production copies, manual scripts, or seeded fixtures. Document key metrics like defect escape rates and incidents caused by poor or missing test data. Additionally, evaluate your compliance with regulations such as GDPR, CCPA, or HIPAA, especially if sensitive data is being used in test environments without proper masking.

These insights provide the groundwork for creating an AI-driven TDM strategy.

Designing an AI-Powered TDM Strategy

Decide when to use synthetic data versus masked production data. Synthetic data is ideal for testing new features and working in privacy-sensitive areas like healthcare or financial technology. Masked production data, on the other hand, works best for regression scenarios that require production-like conditions.

Define your data models and desired outcomes. For instance, if you're working on a U.S. payments application, you might need realistic transaction streams that include valid ZIP codes, dollar amounts, and edge cases like fraudulent transactions. Implement masking policies for sensitive information such as names, Social Security numbers, addresses, and credit card numbers. AI tools can help detect and obfuscate this data while maintaining realistic formats. Start by focusing on one or two high-impact pipelines, such as those for customer-facing web or mobile applications, and define clear success metrics like reduced setup times.

Once your strategy is ready, integrate AI TDM tools directly into your CI/CD workflows.

Integrating AI TDM into CI/CD Workflows

Connect AI TDM tools to your CI/CD platform using APIs or CLI tools. For platforms like Jenkins, GitHub Actions, or GitLab CI, configure pipelines to trigger TDM endpoints for on-demand data generation. For example, a pull request could initiate a workflow that generates a small, high-risk subset of masked data for testing.

Some tools, like Ranger, integrate seamlessly with GitHub and Slack to automate test creation and maintenance while connecting to CI/CD pipelines (https://ranger.net). For teams practicing trunk-based development, AI TDM jobs can be configured to run in parallel across multiple branches or microservices, ensuring that each pipeline run uses isolated data sets.

Ensuring Security, Compliance, and Scalability

To secure sensitive data, implement role-based access control (RBAC), maintain audit trails, and use ephemeral, encrypted environments.

Ensure your AI TDM tools offer data lineage capabilities, which track the origin of data and document any transformations applied - an essential feature for regulatory audits. To handle peak demand, design for horizontal scalability by running multiple TDM jobs in parallel and caching frequently used datasets to minimize bottlenecks during busy periods. Tools like Ranger combine AI with human oversight to validate data masking and test quality, reducing compliance risks while maintaining GitHub integration.

Monitoring and Evolving Your AI TDM System

Aggregate logs and trace distributed processes to quickly identify and resolve issues. Set up alerts for DevOps teams when data jobs slow down pipelines. Regularly review generated data for bias or gaps - such as underrepresented regions, currency ranges, or edge cases - and adjust your models as needed.

Hold quarterly or release-based retrospectives with QA, DevOps, and security teams to evaluate TDM performance. Update masking rules, data generation templates, and pipeline integration processes based on feedback. By leveraging AI insights, you can continuously improve your TDM system to meet the changing demands of your pipelines. Organizations implementing AI-powered test management in CI/CD have reported up to a 70% reduction in test cycle times and fewer production issues by consistently refining their systems.

Conclusion

Key Takeaways

AI-powered test data management is transforming CI/CD pipelines by removing traditional bottlenecks. Manual data requests, database cloning, and cross-team coordination often slow releases by hours or even days. In contrast, AI-driven TDM delivers realistic, compliant test data in just minutes, seamlessly integrated into pipelines through APIs. Companies using these systems have reported a 70% reduction in test cycle times and fewer production issues, thanks to quicker feedback loops and more dependable test results.

AI also automates sensitive data masking to comply with U.S. standards like CCPA, all while maintaining key data properties. Additionally, it learns from past test outcomes and production defects to refine test data, ensuring tests catch genuine issues rather than passing on invalid inputs. This leads to more consistent and reliable pipeline runs.

Ranger takes this a step further by offering AI-powered test creation with human oversight. It integrates with tools like GitHub and Slack to streamline test maintenance and deliver real-time updates. Customers have reported saving over 200 hours per engineer annually by automating repetitive testing tasks. Teams consistently ship features faster without compromising quality, highlighting how AI-driven tools can make an immediate impact.

Next Steps for Implementation

To harness these AI advancements, start by evaluating your current test data challenges. Measure how long it takes to provision test data, identify delays in releases, and assess any reliance on raw production data. Connect these findings to broader business metrics, such as deployment frequency and the costs tied to post-release defects, to establish a clear baseline.

From there, pinpoint bottlenecks like slow environment setups or data-dependent test cases. Pilot an AI-based TDM tool on a small set of tests, configuring automated masking and synthetic data rules specific to your domain. Integrate these into your CI/CD pipeline via APIs, and set up a human review process to allow QA and security teams to validate the generated data early. As the system stabilizes and delivers measurable ROI, gradually expand its coverage.

If you're searching for a solution that works seamlessly with your existing automation and CI/CD tools, consider platforms like Ranger. These tools can streamline your testing workflows and help maintain consistent quality as you scale.

FAQs

How does AI enhance test data management in CI/CD pipelines?

AI is transforming test data management within CI/CD pipelines by automating essential tasks such as test creation and upkeep. This automation not only cuts down on manual labor but also reduces the chances of errors, ensuring consistent and dependable testing throughout the development process.

By leveraging AI, teams can quickly pinpoint and resolve genuine bugs, saving valuable time and accelerating the release of new features. These AI-driven tools simplify workflows, allowing software teams to concentrate on delivering top-notch products with greater efficiency.

How does AI-driven test data management help with compliance?

AI-powered test data management (TDM) plays a key role in maintaining compliance by protecting sensitive information and adhering to regulations like GDPR and HIPAA. It automates critical tasks such as data anonymization and masking, which minimizes the chances of data breaches and helps avoid costly penalties for non-compliance.

With AI handling test data, teams can uphold strict security and privacy standards while reducing the need for manual intervention. This not only ensures regulatory compliance but also simplifies workflows, making processes more efficient.

How do AI-powered test data management tools work with CI/CD platforms like Jenkins?

AI-driven test data management tools, like Ranger, work seamlessly with CI/CD platforms such as Jenkins through APIs or plugins. These connections allow for automated test data provisioning, smooth test execution, and real-time result tracking directly within the pipeline.

Ranger also automates test creation and upkeep, enabling continuous testing and quicker bug detection. This ensures software quality remains high throughout the development process.

%201.svg)

.avif)

%201%20(1).svg)